Ev ve Ofis taşıma sektöründe lider olmak.Teknolojiyi klrd takip ederek bunu müşteri menuniyeti amacı için kullanmak.Sektörde marka olmak.

İstanbul evden eve nakliyat

Misyonumuz sayesinde edindiğimiz müşteri memnuniyeti ve güven ile müşterilerimizin bizi tavsiye etmelerini sağlamak.

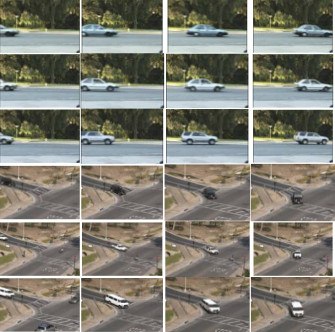

Pedestrian tracking with crowd simulation models in a multi-camera system

.png) In this paper, a multi-camera tracking system with integrated crowd simulation is proposed in order to explore the possibility to make homography information more helpful. Two crowd simulators with different simulation strategies are used to investigate the influence of the simulation strategy on the final tracking performance. The performance is evaluated by multiple object tracking precision and accuracy (MOTP and MOTA) metrics, for all the camera views and the results obtained under real-world coordinates. The experimental results demonstrate that crowd simulators boost the tracking performance significantly, especially for crowded scenes with higher density.

In this paper, a multi-camera tracking system with integrated crowd simulation is proposed in order to explore the possibility to make homography information more helpful. Two crowd simulators with different simulation strategies are used to investigate the influence of the simulation strategy on the final tracking performance. The performance is evaluated by multiple object tracking precision and accuracy (MOTP and MOTA) metrics, for all the camera views and the results obtained under real-world coordinates. The experimental results demonstrate that crowd simulators boost the tracking performance significantly, especially for crowded scenes with higher density.

Novel Representation for Driver Emotion Recognition in Motor Vehicle Videos

.png) A novel feature representation of human facial expressions for emotion recognition is developed. The representation leveraged the background texture removal ability of Anisotropic Inhibited Gabor Filtering (AIGF) with the compact representation of spatiotemporal local binary patterns. The emotion recognition system incorporated face detection and registration followed by the proposed feature representation: Local Anisotropic Inhibited Binary Patterns in Three Orthogonal Planes (LAIBP-TOP) and classification. The system is evaluated on videos from Motor Trend Magazine’s Best Driver Car of the Year 2014-2016. The results showed improved performance compared to other state-of-the-art feature representations.

A novel feature representation of human facial expressions for emotion recognition is developed. The representation leveraged the background texture removal ability of Anisotropic Inhibited Gabor Filtering (AIGF) with the compact representation of spatiotemporal local binary patterns. The emotion recognition system incorporated face detection and registration followed by the proposed feature representation: Local Anisotropic Inhibited Binary Patterns in Three Orthogonal Planes (LAIBP-TOP) and classification. The system is evaluated on videos from Motor Trend Magazine’s Best Driver Car of the Year 2014-2016. The results showed improved performance compared to other state-of-the-art feature representations.

EDeN: Ensemble of deep networks for vehicle classification

.png) Traffic surveillance has always been a challenging task to automate. The main difficulties arise from the high variation of the vehicles appertaining to the same category, low resolution, changes in illumination and occlusions. Due to the lack of large labeled datasets, deep learning techniques still have not shown their full potential. In this paper, thanks to the MIOvision Traffic Camera Dataset (MIO-TCD), an Ensemble of Deep Networks (EDeN) is used to successfully classify surveillance images into eleven different classes of vehicles. The ensemble of deep networks consists of 2 individual networks that are trained independently. Experimental results show that the ensemble of networks gives better performance compared to individual networks and it is robust to noise. The ensemble of networks achieves an accuracy of 97.80%, mean precision of 94.39%, mean recall of 91.90% and Cohen kappa of 96.58.

Traffic surveillance has always been a challenging task to automate. The main difficulties arise from the high variation of the vehicles appertaining to the same category, low resolution, changes in illumination and occlusions. Due to the lack of large labeled datasets, deep learning techniques still have not shown their full potential. In this paper, thanks to the MIOvision Traffic Camera Dataset (MIO-TCD), an Ensemble of Deep Networks (EDeN) is used to successfully classify surveillance images into eleven different classes of vehicles. The ensemble of deep networks consists of 2 individual networks that are trained independently. Experimental results show that the ensemble of networks gives better performance compared to individual networks and it is robust to noise. The ensemble of networks achieves an accuracy of 97.80%, mean precision of 94.39%, mean recall of 91.90% and Cohen kappa of 96.58.

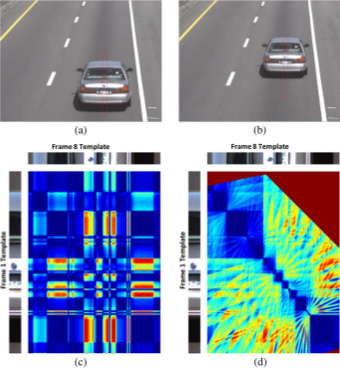

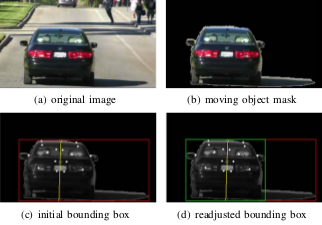

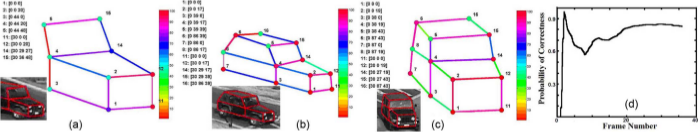

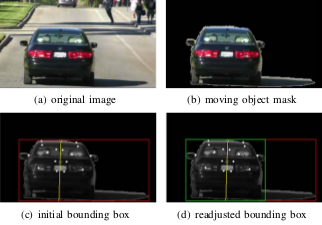

Robust visual rear ground clearance estimation and classification of a passenger vehicle

Computation of Visual Rear Ground Clearance of vehicles was an important computer vision application. This problem was challenging as the road and vehicle rear bumper may have subtle appearance differences, vehicle motion was on uneven surfaces and there were real-time considerations. A method is presented to compute the Visual Rear Ground Clearance of a vehicle from its rear view video and classify it into two classes; namely Low Visual Rear Ground Clearance Vehicles and High Visual Rear Ground Clearance Vehicles. A multi-frame matching technique in conjunction with geometry based constraints was developed. It detected Regions-of-Interest ROIs of moving vehicles and moving shadows, and used shape constraints associated with vehicle geometry as viewed from its rear. It tracked stable features on a vehicle to compute the Visual Rear Ground Clearance.

Computation of Visual Rear Ground Clearance of vehicles was an important computer vision application. This problem was challenging as the road and vehicle rear bumper may have subtle appearance differences, vehicle motion was on uneven surfaces and there were real-time considerations. A method is presented to compute the Visual Rear Ground Clearance of a vehicle from its rear view video and classify it into two classes; namely Low Visual Rear Ground Clearance Vehicles and High Visual Rear Ground Clearance Vehicles. A multi-frame matching technique in conjunction with geometry based constraints was developed. It detected Regions-of-Interest ROIs of moving vehicles and moving shadows, and used shape constraints associated with vehicle geometry as viewed from its rear. It tracked stable features on a vehicle to compute the Visual Rear Ground Clearance.

Multi-camera pedestrian tracking using group structure

.png) In this paper, we propose a novel multi-camera pedestrian tracking system, which incorporates a pedestrian grouping strategy and an online cross-camera model. The new cross-camera model is able to take the advantage of the information from all camera views as well as the group structure in the inference stage, and can be updated based on the learning approach from structured SVM. The experimental results demonstrate the improvement in tracking performance when grouping stage is integrated.

In this paper, we propose a novel multi-camera pedestrian tracking system, which incorporates a pedestrian grouping strategy and an online cross-camera model. The new cross-camera model is able to take the advantage of the information from all camera views as well as the group structure in the inference stage, and can be updated based on the learning approach from structured SVM. The experimental results demonstrate the improvement in tracking performance when grouping stage is integrated.

Efficient alignment for vehicle make and model recognition

.png) This paper presents a make and model recognition system for passenger vehicles. We propose a two-step efficient alignment mechanism to account for view point changes. The 2D alignment problem is solved as two separate one dimensional shortest path problems. To avoid the alignment of the query with the entire database, reference views are used. These views are generated iteratively from the database. To improve the alignment performance further, use of two references is proposed: a universal view and type specific showcase views. The query is aligned with universal view first and compared with the database to find the type of the query. Then the query is aligned with type specific showcase view and compared with the database to achieve the final make and model recognition. We report results on database of 1500 vehicles with more than 250 makes and models.

This paper presents a make and model recognition system for passenger vehicles. We propose a two-step efficient alignment mechanism to account for view point changes. The 2D alignment problem is solved as two separate one dimensional shortest path problems. To avoid the alignment of the query with the entire database, reference views are used. These views are generated iteratively from the database. To improve the alignment performance further, use of two references is proposed: a universal view and type specific showcase views. The query is aligned with universal view first and compared with the database to find the type of the query. Then the query is aligned with type specific showcase view and compared with the database to achieve the final make and model recognition. We report results on database of 1500 vehicles with more than 250 makes and models.

Soft biometrics integrated multi-target tracking

.png) In this paper, we present a soft biometrics based appearance model for multi-target tracking in a single camera. Tracklets, the short-term tracking results, are generated by linking detections in consecutive frames based on conservative constraints. Our goal is to “re-stitching” the adjacent tracklets that contain the same target so that robust long-term tracking results can be achieved. As the appearance of the same target may change greatly due to heavy occlusion, pose variations and changing lighting conditions, a discriminative appearance model is crucial for association-based tracking. Unlike most previous methods which simply use the similarity of color histograms or other low level features to construct the appearance model, we propose to use the fusion of soft biometrics generated from sub-tracklets to learn a discriminative appearance model in an online manner. Compared to low level features, soft biometrics are robust against appearance variation. The experimental results demonstrate that our method is robust and greatly improves the tracking performance over the state-of-the-art method.

In this paper, we present a soft biometrics based appearance model for multi-target tracking in a single camera. Tracklets, the short-term tracking results, are generated by linking detections in consecutive frames based on conservative constraints. Our goal is to “re-stitching” the adjacent tracklets that contain the same target so that robust long-term tracking results can be achieved. As the appearance of the same target may change greatly due to heavy occlusion, pose variations and changing lighting conditions, a discriminative appearance model is crucial for association-based tracking. Unlike most previous methods which simply use the similarity of color histograms or other low level features to construct the appearance model, we propose to use the fusion of soft biometrics generated from sub-tracklets to learn a discriminative appearance model in an online manner. Compared to low level features, soft biometrics are robust against appearance variation. The experimental results demonstrate that our method is robust and greatly improves the tracking performance over the state-of-the-art method.

An online learned elementary grouping model for multi-target tracking

.png) We introduce an online approach to learn possible elementary for inferring high level context that can be used to improve multi-target tracking in a data-association based framework. Unlike most existing association-based tracking approaches that use only low level information to build the affinity model and consider each target as an independent agent, we online learn social grouping behavior to provide additional information for producing more robust tracklets affinities. Social grouping behavior of pairwise targets is first learned from confident tracklets and encoded in a disjoint grouping graph. The grouping graph is further completed with the help of group tracking. The proposed method is efficient, handles group merge and split, and can be easily integrated into any basic affinity model. We evaluate our approach on two public datasets, and show significant improvements compared with state-of-the-art methods.

We introduce an online approach to learn possible elementary for inferring high level context that can be used to improve multi-target tracking in a data-association based framework. Unlike most existing association-based tracking approaches that use only low level information to build the affinity model and consider each target as an independent agent, we online learn social grouping behavior to provide additional information for producing more robust tracklets affinities. Social grouping behavior of pairwise targets is first learned from confident tracklets and encoded in a disjoint grouping graph. The grouping graph is further completed with the help of group tracking. The proposed method is efficient, handles group merge and split, and can be easily integrated into any basic affinity model. We evaluate our approach on two public datasets, and show significant improvements compared with state-of-the-art methods.

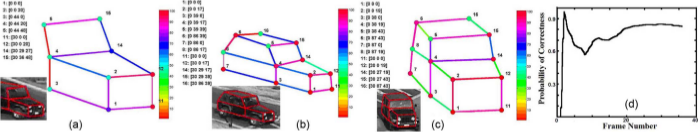

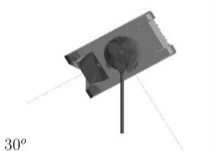

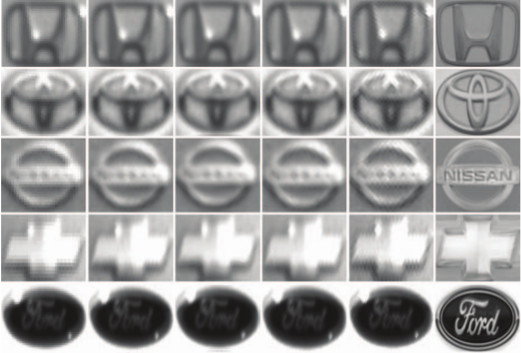

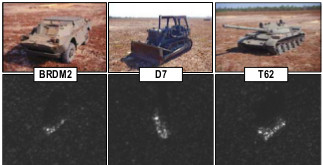

Three-Dimensional Vehicle Model Building From Video

Traffic videos often capture slowly changing views of moving vehicles.

We instead focus on 3-D model building vehicles with different shapes from a

generic 3-D vehicle model by accumulating evidences in streaming traffic videos collected

from a single camera. We propose a novel Bayesian graphical model (BGM), which is called

structure-modifiable adaptive reason-building temporal Bayesian graph (SmartBG), that models

uncertainty propagation in 3-D vehicle model building. Uncertainties are used as relative weights

to fuse evidences and to compute the overall reliability of the generated models.

Results from several traffic videos and two different view points demonstrate the performance of the method.

Traffic videos often capture slowly changing views of moving vehicles.

We instead focus on 3-D model building vehicles with different shapes from a

generic 3-D vehicle model by accumulating evidences in streaming traffic videos collected

from a single camera. We propose a novel Bayesian graphical model (BGM), which is called

structure-modifiable adaptive reason-building temporal Bayesian graph (SmartBG), that models

uncertainty propagation in 3-D vehicle model building. Uncertainties are used as relative weights

to fuse evidences and to compute the overall reliability of the generated models.

Results from several traffic videos and two different view points demonstrate the performance of the method.

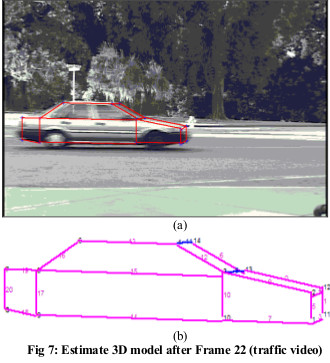

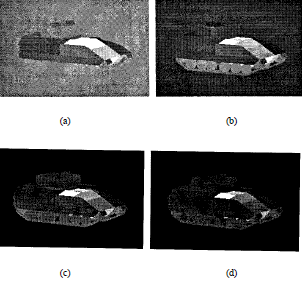

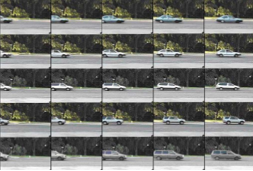

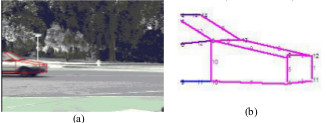

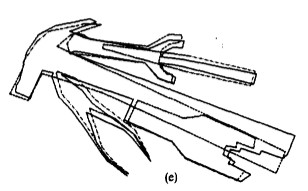

Structural Signatures for Passenger Vehicle Classification in Video

This research focuses on a challenging pattern recognition problem of significant industrial impact,

i.e., classifying vehicles from their rear videos as observed by a camera mounted on top of a highway

with vehicles travelling at high speed. To solve this problem, we present a novel feature called

structural signature. From a rear-view video, a structural signature recovers the vehicle side

profile information, which is crucial in its classification. We present a complete system

that computes structural

signatures and uses them for classification of passenger vehicles into sedans, pickups,

and minivans/sport utility vehicles in highway videos.

This research focuses on a challenging pattern recognition problem of significant industrial impact,

i.e., classifying vehicles from their rear videos as observed by a camera mounted on top of a highway

with vehicles travelling at high speed. To solve this problem, we present a novel feature called

structural signature. From a rear-view video, a structural signature recovers the vehicle side

profile information, which is crucial in its classification. We present a complete system

that computes structural

signatures and uses them for classification of passenger vehicles into sedans, pickups,

and minivans/sport utility vehicles in highway videos.

Context-aware reinforcement learning for re-identification in a video network

.png) Re-identification of people in a large camera network has gained popularity in recent years. The problem still remains challenging due to variations across cameras. A variety of techniques which concentrate on either features or matching have been proposed. Similar to majority of computer vision approaches, these techniques use fixed features and/or parameters. As the operating conditions of a vision system change, its performance deteriorates as fixed features and/or parameters are no longer suited for the new conditions. We propose to use context-aware reinforcement learning to handle this challenge. We capture the changing operating conditions through context and learn mapping between context and feature weights to improve the re-identification accuracy. The results are shown using videos from a camera network that consists of eight cameras.

Re-identification of people in a large camera network has gained popularity in recent years. The problem still remains challenging due to variations across cameras. A variety of techniques which concentrate on either features or matching have been proposed. Similar to majority of computer vision approaches, these techniques use fixed features and/or parameters. As the operating conditions of a vision system change, its performance deteriorates as fixed features and/or parameters are no longer suited for the new conditions. We propose to use context-aware reinforcement learning to handle this challenge. We capture the changing operating conditions through context and learn mapping between context and feature weights to improve the re-identification accuracy. The results are shown using videos from a camera network that consists of eight cameras.

Improving person re-identification by soft biometrics based re-ranking

.png) The problem of person re-identification is to recognize a target subject across non-overlapping distributed cameras at different times and locations. In a real-world scenario, person re-identification is challenging due to the dramatic changes in a subject’s appearance in terms of pose, illumination, background, and occlusion. Existing approaches either try to design robust features to identify a subject across different views or learn distance metrics to maximize the similarity between different views of the same person and minimize the similarity between different views of different persons. In this paper, we aim at improving the reidentification performance by reranking the returned results based on soft biometric attributes, such as gender, which can describe probe and gallery subjects at a higher level. During reranking, the soft biometric attributes are detected and attribute-based distance scores are calculated between pairs of images by using a regression model. These distance scores are used for reranking the initially returned matches. Experiments on a benchmark database with different baseline re-identification methods show that reranking improves the recognition accuracy by moving upwards the returned matches from gallery that share the same soft biometric attributes as the probe subject.

The problem of person re-identification is to recognize a target subject across non-overlapping distributed cameras at different times and locations. In a real-world scenario, person re-identification is challenging due to the dramatic changes in a subject’s appearance in terms of pose, illumination, background, and occlusion. Existing approaches either try to design robust features to identify a subject across different views or learn distance metrics to maximize the similarity between different views of the same person and minimize the similarity between different views of different persons. In this paper, we aim at improving the reidentification performance by reranking the returned results based on soft biometric attributes, such as gender, which can describe probe and gallery subjects at a higher level. During reranking, the soft biometric attributes are detected and attribute-based distance scores are calculated between pairs of images by using a regression model. These distance scores are used for reranking the initially returned matches. Experiments on a benchmark database with different baseline re-identification methods show that reranking improves the recognition accuracy by moving upwards the returned matches from gallery that share the same soft biometric attributes as the probe subject.

Reference set based appearance model for tracking across non-overlapping cameras

.png) Multi-target tracking in non-overlapping cameras is challenging due to the vast appearance change of the targets across camera views caused by variations in illumination conditions, poses, and camera imaging characteristics. Therefore, direct track association is difficult and prone to error. In this paper, we propose a novel reference set based appearance model to improve multi-target tracking in a network of nonoverlapping video cameras. Unlike previous work, a reference set is constructed for a pair of cameras, containing targets appearing in both camera views. For track association, instead of comparing the appearance of two targets in different camera views directly, they are compared to the reference set. The reference set acts as a basis to represent a target by measuring the similarity between the target and each of the individuals in the reference set. The effectiveness of the proposed method over the baseline models on challenging realworld multi-camera video data is validated by the experiments.

Multi-target tracking in non-overlapping cameras is challenging due to the vast appearance change of the targets across camera views caused by variations in illumination conditions, poses, and camera imaging characteristics. Therefore, direct track association is difficult and prone to error. In this paper, we propose a novel reference set based appearance model to improve multi-target tracking in a network of nonoverlapping video cameras. Unlike previous work, a reference set is constructed for a pair of cameras, containing targets appearing in both camera views. For track association, instead of comparing the appearance of two targets in different camera views directly, they are compared to the reference set. The reference set acts as a basis to represent a target by measuring the similarity between the target and each of the individuals in the reference set. The effectiveness of the proposed method over the baseline models on challenging realworld multi-camera video data is validated by the experiments.

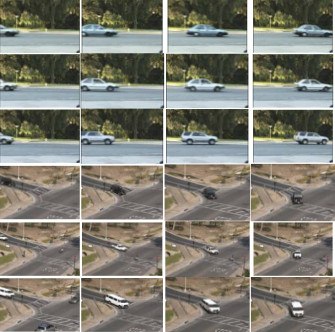

Single camera multi-person tracking based on crowd simulation

.png) Tracking individuals in video sequences, especially in crowded scenes, is still a challenging research topic in the area of pattern recognition and computer vision. However, current single camera tracking approaches are mostly based on visual features only. The novelty of the approach proposed in this paper is the integration of evidences from a crowd simulation algorithm into a pure vision based method. Based on a stateof-the-art tracking-by-detection method, the integration is achieved by evaluating particle weights with additional prediction of individual positions, which is obtained from the crowd simulation algorithm. Our experimental results indicate that, by integrating simulation, the multi-person tracking performance such as MOTP and MOTA can be increased by an average about 2% and 5%, which provides significant evidence for the effectiveness of our approach.

Tracking individuals in video sequences, especially in crowded scenes, is still a challenging research topic in the area of pattern recognition and computer vision. However, current single camera tracking approaches are mostly based on visual features only. The novelty of the approach proposed in this paper is the integration of evidences from a crowd simulation algorithm into a pure vision based method. Based on a stateof-the-art tracking-by-detection method, the integration is achieved by evaluating particle weights with additional prediction of individual positions, which is obtained from the crowd simulation algorithm. Our experimental results indicate that, by integrating simulation, the multi-person tracking performance such as MOTP and MOTA can be increased by an average about 2% and 5%, which provides significant evidence for the effectiveness of our approach.

Integrating crowd simulation for pedestrian tracking in a multi-camera system

.png) Multi-camera multi-target tracking is one of the most active research topics in computer vision. However, many challenges remain to achieve robust performance in real-world video networks. In this paper we extend the state-of-the-art single camera tracking method, with both detection and crowd simulation, to a multiple camera tracking approach that exploits crowd simulation and uses principal axis-based integration. The experiments are conducted on PETS 2009 data set and the performance is evaluated by multiple object tracking precision and accuracy (MOTP and MOTA) based on the position of each pedestrian on the ground plane. It is demonstrated that the information from crowd simulation can provide significant advantage for tracking multiple pedestrians through multiple cameras.

Multi-camera multi-target tracking is one of the most active research topics in computer vision. However, many challenges remain to achieve robust performance in real-world video networks. In this paper we extend the state-of-the-art single camera tracking method, with both detection and crowd simulation, to a multiple camera tracking approach that exploits crowd simulation and uses principal axis-based integration. The experiments are conducted on PETS 2009 data set and the performance is evaluated by multiple object tracking precision and accuracy (MOTP and MOTA) based on the position of each pedestrian on the ground plane. It is demonstrated that the information from crowd simulation can provide significant advantage for tracking multiple pedestrians through multiple cameras.

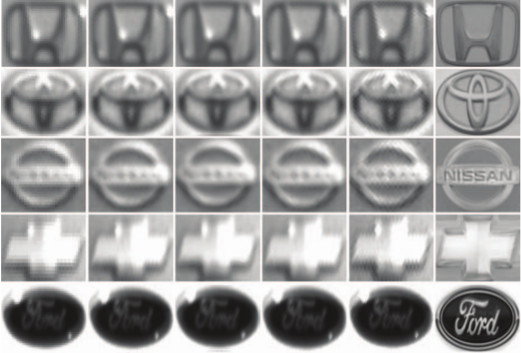

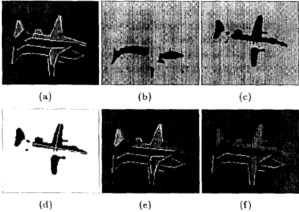

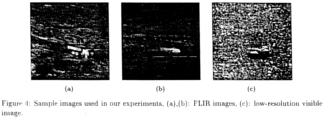

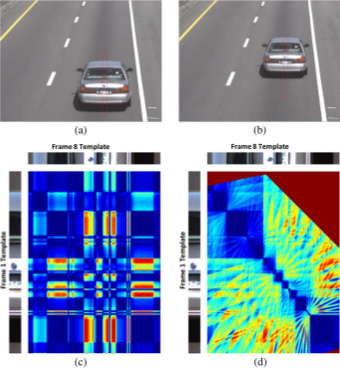

Vehicle Logo Super-Resolution by Canonical Correlation Analysis

Recognition of a vehicle make is of interest in the fields of

law enforcement and surveillance. We have develop

a canonical correlation analysis (CCA) based method for

vehicle logo super-resolution to facilitate the recognition of

the vehicle make. From a limited number of high-resolution

logos, we populate the training dataset for each make using

gamma transformations. Given a vehicle logo from a low resolution

source (i.e., surveillance or traffic camera recordings),

the learned models yield super-resolved results. By

matching the low-resolution image and the generated high resolution

images, we select the final output that is closest to

the low-resolution image in the histogram of oriented gradients

(HOG) feature space. Experimental results show that our

approach outperforms the state-of-the-art super-resolution

methods in qualitative and quantitative measures. Furthermore,

the super-resolved logos help to improve the accuracy

in the subsequent recognition tasks significantly.

Recognition of a vehicle make is of interest in the fields of

law enforcement and surveillance. We have develop

a canonical correlation analysis (CCA) based method for

vehicle logo super-resolution to facilitate the recognition of

the vehicle make. From a limited number of high-resolution

logos, we populate the training dataset for each make using

gamma transformations. Given a vehicle logo from a low resolution

source (i.e., surveillance or traffic camera recordings),

the learned models yield super-resolved results. By

matching the low-resolution image and the generated high resolution

images, we select the final output that is closest to

the low-resolution image in the histogram of oriented gradients

(HOG) feature space. Experimental results show that our

approach outperforms the state-of-the-art super-resolution

methods in qualitative and quantitative measures. Furthermore,

the super-resolved logos help to improve the accuracy

in the subsequent recognition tasks significantly.

Dynamic Bayesian Networks for Vehicle Classification in Video

Vehicle classification has evolved into a significant subject of study due to its importance in autonomous

navigation, traffic analysis, surveillance and security systems, and transportation management.

We present a

system which classifies a vehicle (given its direct rear-side view) into one of

four classes Sedan, Pickup truck, SUV/Minivan, and unknown. A feature set of tail light and vehicle

dimensions is extracted which feeds a feature selection algorithm.

A feature vector is then processed by a Hybrid Dynamic Bayesian Network (HDBN) to

classify each vehicle.

Vehicle classification has evolved into a significant subject of study due to its importance in autonomous

navigation, traffic analysis, surveillance and security systems, and transportation management.

We present a

system which classifies a vehicle (given its direct rear-side view) into one of

four classes Sedan, Pickup truck, SUV/Minivan, and unknown. A feature set of tail light and vehicle

dimensions is extracted which feeds a feature selection algorithm.

A feature vector is then processed by a Hybrid Dynamic Bayesian Network (HDBN) to

classify each vehicle.

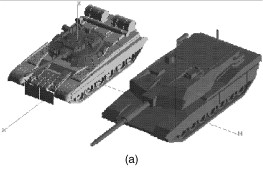

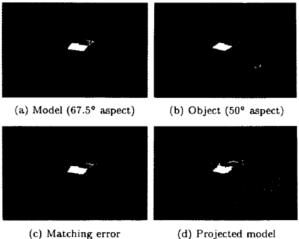

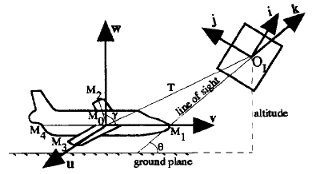

Incremental Unsupervised Three-Dimensional Vehicle Model Learning From Video

We introduce a new generic model-based approach for building 3-D models of vehicles

from color video from a single uncalibrated traffic-surveillance camera. We propose a novel directional

template method that uses trigonometric relations of the 2-D features and geometric relations of a single

3-D generic vehicle model to map 2-D features to 3-D in the face of projection and foreshortening effects.

Results are shown for several simulated and real traffic videos in an uncontrolled setup.

The performance of the proposed method for several types of

vehicles in two considerably different traffic spots is very promising to encourage its applicability in

3-D reconstruction of other rigid objects in video.

We introduce a new generic model-based approach for building 3-D models of vehicles

from color video from a single uncalibrated traffic-surveillance camera. We propose a novel directional

template method that uses trigonometric relations of the 2-D features and geometric relations of a single

3-D generic vehicle model to map 2-D features to 3-D in the face of projection and foreshortening effects.

Results are shown for several simulated and real traffic videos in an uncontrolled setup.

The performance of the proposed method for several types of

vehicles in two considerably different traffic spots is very promising to encourage its applicability in

3-D reconstruction of other rigid objects in video.

Bayesian Based 3D Shape Reconstruction from Video

In a video sequence with a 3D rigid object moving,

changing shapes of the 2D projections provide interrelated

spatio-temporal cues for incremental 3D shape

reconstruction. This research describes a probabilistic

approach for intelligent view-integration to build 3D model

of vehicles from traffic videos collected from an

uncalibrated static camera. The proposed Bayesian net

framework allows the handling of uncertainties in a

systematic manner. The performance is verified with several

types of vehicles in different videos.

In a video sequence with a 3D rigid object moving,

changing shapes of the 2D projections provide interrelated

spatio-temporal cues for incremental 3D shape

reconstruction. This research describes a probabilistic

approach for intelligent view-integration to build 3D model

of vehicles from traffic videos collected from an

uncalibrated static camera. The proposed Bayesian net

framework allows the handling of uncertainties in a

systematic manner. The performance is verified with several

types of vehicles in different videos.

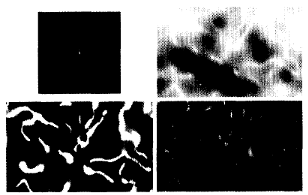

Automated classification of skippers based on parts representation

.png) Image data can help to understand species evolution from a new perspective. In this paper, we propose a parts-based (patch-based) representation for biological images. Experimental results show this compact model as efficient and effective for representing and classifying skipper images. The results can be further improved by exploiting symmetry of the shape and increasing the quality of image segmentation.

Image data can help to understand species evolution from a new perspective. In this paper, we propose a parts-based (patch-based) representation for biological images. Experimental results show this compact model as efficient and effective for representing and classifying skipper images. The results can be further improved by exploiting symmetry of the shape and increasing the quality of image segmentation.

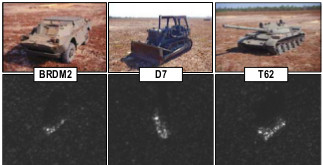

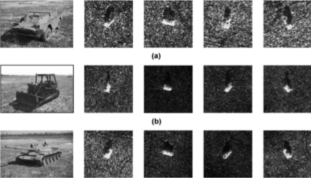

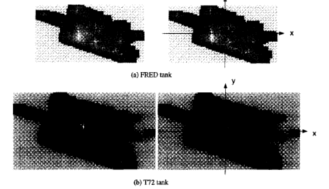

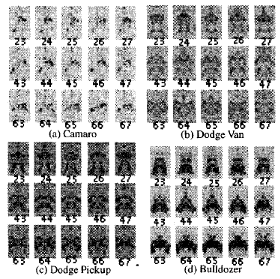

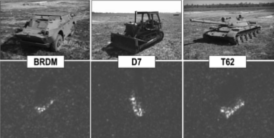

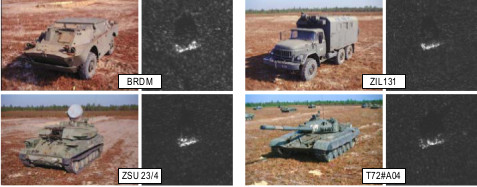

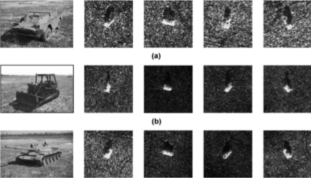

Visual Learning by Evolutionary and Coevolutionary Feature Synthesis

We present a novel method for learning complex concepts/hypotheses directly from raw

training data. The task addressed here concerns data-driven synthesis of recognition procedures for

real-world object recognition. The method uses linear genetic programming to encode potential

solutions expressed in terms of elementary operations, and handles the complexity of the learning

task by applying cooperative coevolution to decompose the problem automatically at the

genotype level. Extensive experimental results

show that the approach attains competitive performance for three-dimensional object recognition in real

synthetic aperture radar imagery.

We present a novel method for learning complex concepts/hypotheses directly from raw

training data. The task addressed here concerns data-driven synthesis of recognition procedures for

real-world object recognition. The method uses linear genetic programming to encode potential

solutions expressed in terms of elementary operations, and handles the complexity of the learning

task by applying cooperative coevolution to decompose the problem automatically at the

genotype level. Extensive experimental results

show that the approach attains competitive performance for three-dimensional object recognition in real

synthetic aperture radar imagery.

Coevolution and Linear Genetic Programming for Visual Learning

We introduce a novel genetically-inspired visual learning method. Given the training images,

this general approach induces a sophisticated feature-based recognition system, by using cooperative

coevolution and linear genetic programming for the procedural representation of feature extraction agents.

The paper describes the learning algorithm and provides a firm rationale for its design. An extensive

experimental evaluation, on the demanding real-world task of object recognition in synthetic aperture radar

(SAR) imagery, shows the competitiveness of the proposed approach with human-designed recognition

systems.

We introduce a novel genetically-inspired visual learning method. Given the training images,

this general approach induces a sophisticated feature-based recognition system, by using cooperative

coevolution and linear genetic programming for the procedural representation of feature extraction agents.

The paper describes the learning algorithm and provides a firm rationale for its design. An extensive

experimental evaluation, on the demanding real-world task of object recognition in synthetic aperture radar

(SAR) imagery, shows the competitiveness of the proposed approach with human-designed recognition

systems.

Visual Learning by Evolutionary Feature Synthesis

We present a novel method for learning complex concepts/hypotheses directly from raw

training data. The task addressed here concerns data-driven synthesis of recognition procedures for

real-world object recognition task. The method uses linear genetic programming to encode potential

solutions expressed in terms of elementary operations, and handles the complexity of the learning task

by applying cooperative coevolution to decompose the problem automatically. The training consists in

coevolving feature extraction procedures, each being a sequence of elementary image processing and

feature extraction operations. Extensive experimental results show that the approach attains competitive

performance for 3-D object recognition in real synthetic aperture radar (SAR) imagery.

We present a novel method for learning complex concepts/hypotheses directly from raw

training data. The task addressed here concerns data-driven synthesis of recognition procedures for

real-world object recognition task. The method uses linear genetic programming to encode potential

solutions expressed in terms of elementary operations, and handles the complexity of the learning task

by applying cooperative coevolution to decompose the problem automatically. The training consists in

coevolving feature extraction procedures, each being a sequence of elementary image processing and

feature extraction operations. Extensive experimental results show that the approach attains competitive

performance for 3-D object recognition in real synthetic aperture radar (SAR) imagery.

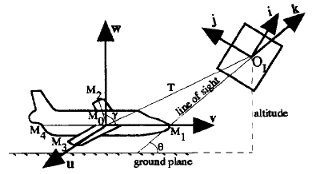

Incremental Vehicle 3-D Modeling from Video

We present a new model-based approach

for building 3-D models of vehicles from color video

provided by a traffic surveillance camera. We

incrementally build 3D models using a clustering

technique. Geometrical relations based on 3D generic

vehicle model map 2D features to 3D. The 3D features are

then adaptively clustered over the frame sequence to

incrementally generate the 3D model of the vehicle.

Results are shown for both simulated and real traffic video.

They are evaluated by a new structural performance

measure underscoring usefulness of incremental learning.

We present a new model-based approach

for building 3-D models of vehicles from color video

provided by a traffic surveillance camera. We

incrementally build 3D models using a clustering

technique. Geometrical relations based on 3D generic

vehicle model map 2D features to 3D. The 3D features are

then adaptively clustered over the frame sequence to

incrementally generate the 3D model of the vehicle.

Results are shown for both simulated and real traffic video.

They are evaluated by a new structural performance

measure underscoring usefulness of incremental learning.

Unsupervised Learning for Incremental 3-D Modeling

Learning based incremental 3D modeling of traffic

vehicles from uncalibrated video data stream has enormous

application potential in traffic monitoring and intelligent

transportation systems. In this research, video data from a

traffic surveillance camera is used to incrementally

develop the 3D model of vehicles using a clustering based

unsupervised learning. Geometrical relations based on 3D

generic vehicle model map 2D features to 3D. The 3D

features are then adaptively clustered over the frames to

incrementally generate the 3D model of the vehicle.

Results are shown for both simulated and real traffic video.

They are evaluated by a structural performance measure.

Learning based incremental 3D modeling of traffic

vehicles from uncalibrated video data stream has enormous

application potential in traffic monitoring and intelligent

transportation systems. In this research, video data from a

traffic surveillance camera is used to incrementally

develop the 3D model of vehicles using a clustering based

unsupervised learning. Geometrical relations based on 3D

generic vehicle model map 2D features to 3D. The 3D

features are then adaptively clustered over the frames to

incrementally generate the 3D model of the vehicle.

Results are shown for both simulated and real traffic video.

They are evaluated by a structural performance measure.

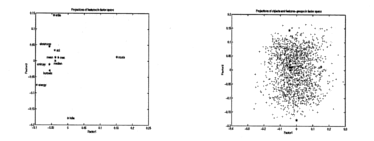

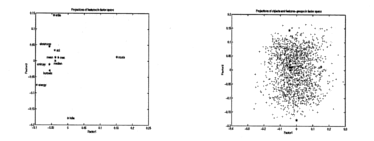

Learning models for predicting recognition performance

.png) This paper addresses one of the fundamental problems encountered in performance prediction for object recognition. In particular we address the problems related to estimation of small gallery size that can give good error estimates and their confidences on large probe sets and populations. We use a generalized two-dimensional prediction model that integrates a hypergeometric probability distribution model with a binomial model explicitly and considers the distortion problem in large populations. We incorporate learning in the prediction process in order to find the optimal small gallery size and to improve its performance. The Chernoff and Chebychev inequalities are used as a guide to obtain the small gallery size. During the prediction we use the expectation-maximum (EM) algorithm to learn the match score and the non-match score distributions (the number of components, their weights, means and covariances) that are represented as Gaussian mixtures. By learning we find the optimal size of small gallery and at the same time provide the upper bound and the lower bound for the prediction on large populations. Results are shown using real-world databases.

This paper addresses one of the fundamental problems encountered in performance prediction for object recognition. In particular we address the problems related to estimation of small gallery size that can give good error estimates and their confidences on large probe sets and populations. We use a generalized two-dimensional prediction model that integrates a hypergeometric probability distribution model with a binomial model explicitly and considers the distortion problem in large populations. We incorporate learning in the prediction process in order to find the optimal small gallery size and to improve its performance. The Chernoff and Chebychev inequalities are used as a guide to obtain the small gallery size. During the prediction we use the expectation-maximum (EM) algorithm to learn the match score and the non-match score distributions (the number of components, their weights, means and covariances) that are represented as Gaussian mixtures. By learning we find the optimal size of small gallery and at the same time provide the upper bound and the lower bound for the prediction on large populations. Results are shown using real-world databases.

Visual learning by coevolutionary feature synthesis

.png) In this paper, a novel genetically inspired visual learning method is proposed. Given the training raster images, this general approach induces a sophisticated feature-based recognition system. It employs the paradigm of cooperative coevolution to handle the computational difficulty of this task. To represent the feature extraction agents, the linear genetic programming is used. The paper describes the learning algorithm and provides a firm rationale for its design. Different architectures of recognition systems are considered that employ the proposed feature synthesis method. An extensive experimental evaluation on the demanding real-world task of object recognition in synthetic aperture radar (SAR) imagery shows the ability of the proposed approach to attain high recognition performance in different operating conditions.

In this paper, a novel genetically inspired visual learning method is proposed. Given the training raster images, this general approach induces a sophisticated feature-based recognition system. It employs the paradigm of cooperative coevolution to handle the computational difficulty of this task. To represent the feature extraction agents, the linear genetic programming is used. The paper describes the learning algorithm and provides a firm rationale for its design. Different architectures of recognition systems are considered that employ the proposed feature synthesis method. An extensive experimental evaluation on the demanding real-world task of object recognition in synthetic aperture radar (SAR) imagery shows the ability of the proposed approach to attain high recognition performance in different operating conditions.

Evolutionary Feature Synthesis for Object Recognition

We've developed a coevolutionary genetic

programming (CGP) approach to learn composite features for object recognition. The motivation for using CGP is to

overcome the limitations of human experts who consider only a small number of conventional

combinations of primitive features during synthesis. CGP, on the other hand, can try a very large number

of unconventional combinations and these unconventional combinations yield exceptionally good results

in some cases. The comparison with other classical classification algorithms

is favourable to the CGP-based approach we've proposed.

We've developed a coevolutionary genetic

programming (CGP) approach to learn composite features for object recognition. The motivation for using CGP is to

overcome the limitations of human experts who consider only a small number of conventional

combinations of primitive features during synthesis. CGP, on the other hand, can try a very large number

of unconventional combinations and these unconventional combinations yield exceptionally good results

in some cases. The comparison with other classical classification algorithms

is favourable to the CGP-based approach we've proposed.

Cooperative coevolution fusion for moving object detection

.png) In this paper we introduce a novel sensor fusion algorithm based on the cooperative coevolutionary paradigm. We develop a multisensor robust moving object detection system that can operate under a variety of illumination and environmental conditions. Our experiments indicate that this evolutionary paradigm is well suited as a sensor fusion model and can be extended to different sensing modalities.

In this paper we introduce a novel sensor fusion algorithm based on the cooperative coevolutionary paradigm. We develop a multisensor robust moving object detection system that can operate under a variety of illumination and environmental conditions. Our experiments indicate that this evolutionary paradigm is well suited as a sensor fusion model and can be extended to different sensing modalities.

Object detection in multimodal images using genetic programming

.png) In this paper, we learn to discover composite operators and features that are synthesized from combinations of primitive image processing operations for object detection. Our approach is based on genetic programming (GP). The motivation for using GP-based learning is that we hope to automate the design of object detection system by automatically synthesizing object detection procedures from primitive operations and primitive features. The human expert, limited by experience, knowledge and time, can only try a very small number of conventional combinations. Genetic programming, on the other hand, attempts many unconventional combinations that may never be imagined by human experts. In some cases, these unconventional combinations yield exceptionally good results.

In this paper, we learn to discover composite operators and features that are synthesized from combinations of primitive image processing operations for object detection. Our approach is based on genetic programming (GP). The motivation for using GP-based learning is that we hope to automate the design of object detection system by automatically synthesizing object detection procedures from primitive operations and primitive features. The human expert, limited by experience, knowledge and time, can only try a very small number of conventional combinations. Genetic programming, on the other hand, attempts many unconventional combinations that may never be imagined by human experts. In some cases, these unconventional combinations yield exceptionally good results.

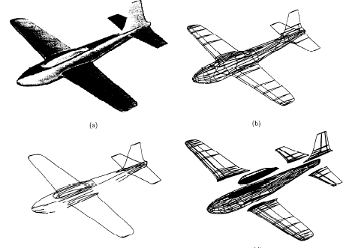

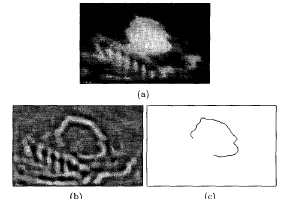

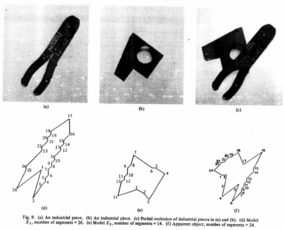

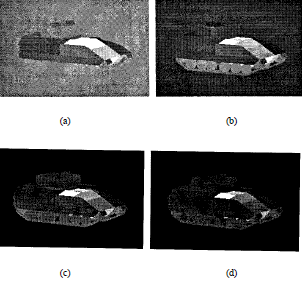

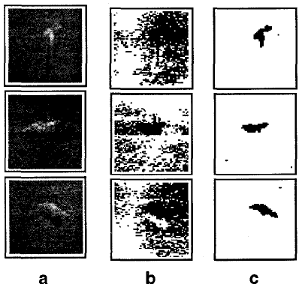

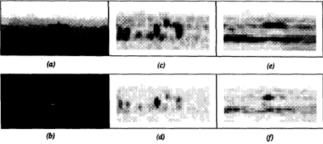

Physical models for moving shadow and object detection in video

.png) Current moving object detection systems typically detect shadows cast by the moving object as part of the moving object. In this paper, the problem of separating moving cast shadows from the moving objects in an outdoor environment is addressed. Unlike previous work, we present an approach that does not rely on any geometrical assumptions such as camera location and ground surface/object geometry. The approach is based on a new spatio-temporal albedo test and dichromatic reflection model and accounts for both the sun and the sky illuminations. Results are presented for several video sequences representing a variety of ground materials when the shadows are cast on different surface types. These results show that our approach is robust to widely different background and foreground materials, and illuminations.

Current moving object detection systems typically detect shadows cast by the moving object as part of the moving object. In this paper, the problem of separating moving cast shadows from the moving objects in an outdoor environment is addressed. Unlike previous work, we present an approach that does not rely on any geometrical assumptions such as camera location and ground surface/object geometry. The approach is based on a new spatio-temporal albedo test and dichromatic reflection model and accounts for both the sun and the sky illuminations. Results are presented for several video sequences representing a variety of ground materials when the shadows are cast on different surface types. These results show that our approach is robust to widely different background and foreground materials, and illuminations.

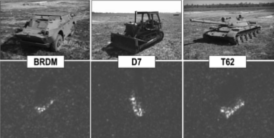

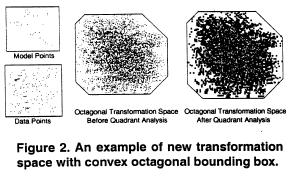

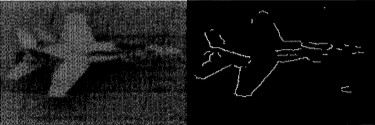

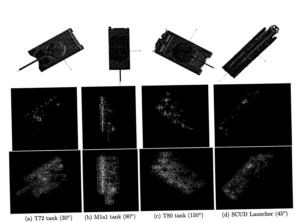

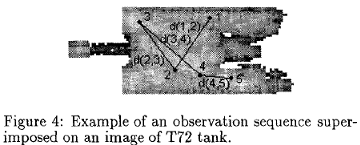

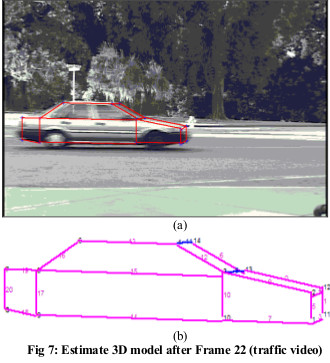

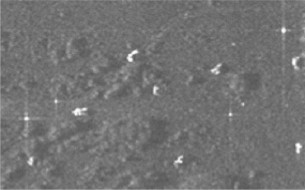

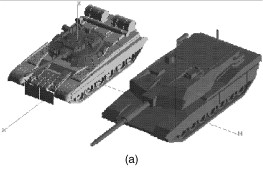

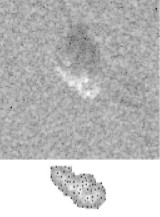

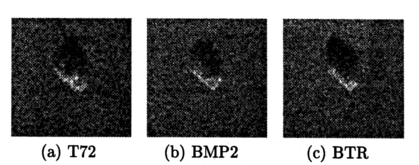

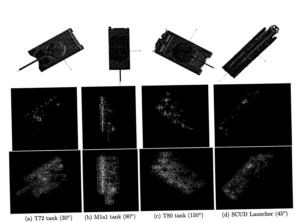

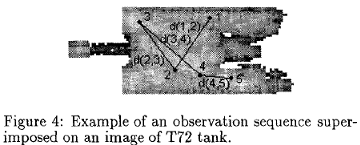

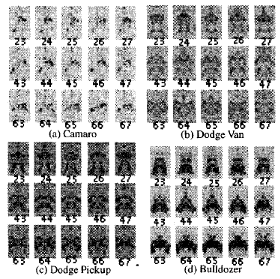

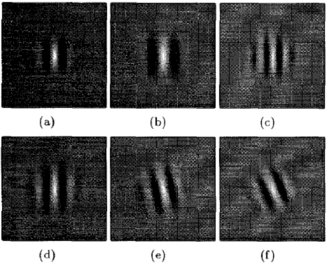

Multiple look angle SAR recognition

.png) The focus of this paper is optimizing the recognition of vehicles in Synthetic Aperture radar (SAR) imagery by exploiting the azimuthal variance of scatterers using multiple SAR recognizers at different look angles. The variance of SAR scattering center locations with target azimuth leads to recognition system results at different azimuths that are independent, even for small azimuth deltas. Extensive experimental recognition results are presented in terms of receiver operating characteristic (ROC) curves to show the effects of multiple look angles on recognition performance for MSTAR vehicle targets with configuration variants, articulation, and occlusion.

The focus of this paper is optimizing the recognition of vehicles in Synthetic Aperture radar (SAR) imagery by exploiting the azimuthal variance of scatterers using multiple SAR recognizers at different look angles. The variance of SAR scattering center locations with target azimuth leads to recognition system results at different azimuths that are independent, even for small azimuth deltas. Extensive experimental recognition results are presented in terms of receiver operating characteristic (ROC) curves to show the effects of multiple look angles on recognition performance for MSTAR vehicle targets with configuration variants, articulation, and occlusion.

Learning composite features for object recognition

.png) Features represent the characteristics of objects and selecting or synthesizing effective composite features are the key factors to the performance of object recognition. In this paper, we propose a co-evolutionary genetic programming (CGP) approach to learn composite features for object recognition. The motivation for using CGP is to overcome the limitations of human experts who consider only a small number of conventional combinations of primitive features during synthesis. On the other hand, CGP can try a very large number of unconventional combinations and these unconventional combinations may yield exceptionally good results in some cases. Our experimental results with real synthetic aperture radar (SAR) images show that CGP can learn good composite features. We show results to distinguish objects from clutter and to distinguish objects that belong to several classes.

Features represent the characteristics of objects and selecting or synthesizing effective composite features are the key factors to the performance of object recognition. In this paper, we propose a co-evolutionary genetic programming (CGP) approach to learn composite features for object recognition. The motivation for using CGP is to overcome the limitations of human experts who consider only a small number of conventional combinations of primitive features during synthesis. On the other hand, CGP can try a very large number of unconventional combinations and these unconventional combinations may yield exceptionally good results in some cases. Our experimental results with real synthetic aperture radar (SAR) images show that CGP can learn good composite features. We show results to distinguish objects from clutter and to distinguish objects that belong to several classes.

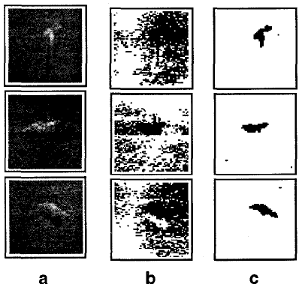

Stochastic Models for Recognition of Occluded Targets

Recognition of occluded objects in synthetic aperture radar (SAR) images is a significant problem for

automatic target recognition. Stochastic models provide some attractive features for pattern matching

and recognition under partial occlusion and noise. We present a hidden Markov modeling

based approach for recognizing objects in SAR images. We identify the peculiar characteristics of SAR

sensors and using these characteristics we develop feature based multiple models for a given SAR image

of an object. In order to improve

performance we integrate these models synergistically using their probabilistic estimates for recognition of

a particular target at a specific azimuth. Experimental results are presented using both synthetic

and real SAR images.

Recognition of occluded objects in synthetic aperture radar (SAR) images is a significant problem for

automatic target recognition. Stochastic models provide some attractive features for pattern matching

and recognition under partial occlusion and noise. We present a hidden Markov modeling

based approach for recognizing objects in SAR images. We identify the peculiar characteristics of SAR

sensors and using these characteristics we develop feature based multiple models for a given SAR image

of an object. In order to improve

performance we integrate these models synergistically using their probabilistic estimates for recognition of

a particular target at a specific azimuth. Experimental results are presented using both synthetic

and real SAR images.

Coevolutionary computation for synthesis of recognition systems

.png) This paper introduces a novel visual learning method that involves cooperative coevolution and linear genetic programming. Given exclusively training images, the evolutionary learning algorithm induces a set of sophisticated feature extraction agents represented in a procedural way. The proposed method incorporates only general vision-related background knowledge and does not require any task-specific information. The paper describes the learning algorithm, provides a firm rationale for its design, and proves its competitiveness with the human-designed recognition systems in an extensive experimental evaluation, on the demanding real-world task of object recognition in synthetic aperture radar (SAR) imagery.

This paper introduces a novel visual learning method that involves cooperative coevolution and linear genetic programming. Given exclusively training images, the evolutionary learning algorithm induces a set of sophisticated feature extraction agents represented in a procedural way. The proposed method incorporates only general vision-related background knowledge and does not require any task-specific information. The paper describes the learning algorithm, provides a firm rationale for its design, and proves its competitiveness with the human-designed recognition systems in an extensive experimental evaluation, on the demanding real-world task of object recognition in synthetic aperture radar (SAR) imagery.

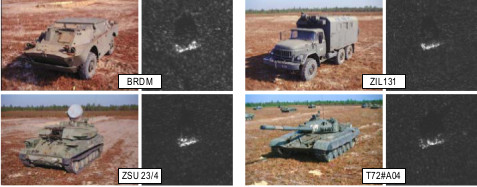

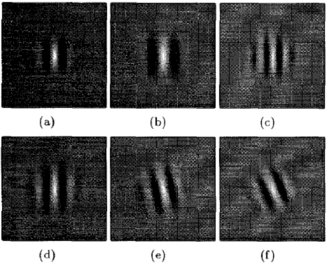

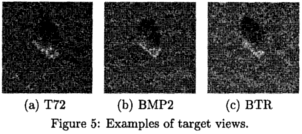

Coevolving feature extraction agents for target recognition in SAR images

.png) This paper describes a novel evolutionary method for automatic induction of target recognition procedures from examples. The learning process starts with training data containing SAR images with labeled targets and consists in coevolving the population of feature extraction agents that cooperate to build an appropriate representation of the input image. Features extracted by a team of cooperating agents are used to induce a machine learning classifier that is responsible for making the final decision of recognizing a target in a SAR image. Each agent (individual) contains feature extraction procedure encoded according to the principles of linear genetic programming (LGP). Like `plain" genetic programming, in LGP an agent"s genome encodes a program that is executed and tested on the set of training images during the fitness calculation. The program is a sequence of calls to the library of parameterized operations, including, but not limited to, global and local image processing operations, elementary feature extraction, and logic and arithmetic operations. Particular calls operate on working variables that enable the program to store intermediate results, and therefore design complex features. This paper contains detailed description of the learning and recognition methodology outlined here. In experimental part, we report and analyze the results obtained when testing the proposed approach for SAR target recognition using MSTAR database.

This paper describes a novel evolutionary method for automatic induction of target recognition procedures from examples. The learning process starts with training data containing SAR images with labeled targets and consists in coevolving the population of feature extraction agents that cooperate to build an appropriate representation of the input image. Features extracted by a team of cooperating agents are used to induce a machine learning classifier that is responsible for making the final decision of recognizing a target in a SAR image. Each agent (individual) contains feature extraction procedure encoded according to the principles of linear genetic programming (LGP). Like `plain" genetic programming, in LGP an agent"s genome encodes a program that is executed and tested on the set of training images during the fitness calculation. The program is a sequence of calls to the library of parameterized operations, including, but not limited to, global and local image processing operations, elementary feature extraction, and logic and arithmetic operations. Particular calls operate on working variables that enable the program to store intermediate results, and therefore design complex features. This paper contains detailed description of the learning and recognition methodology outlined here. In experimental part, we report and analyze the results obtained when testing the proposed approach for SAR target recognition using MSTAR database.

Composite class models for SAR recognition

.png) This paper focuses on a genetic algorithm based method that automates the construction of local feature based composite class models to capture the salient characteristics of configuration variants of vehicle targets in SAR imagery and increase the performance of SAR recognition systems. The recognition models are based on quasi-invariant local features: SAR scattering center locations and magnitudes. The approach uses an efficient SAR recognition system as an evaluation function to determine the fitness class models. Experimental results are given on the fitness of the composite models and the similarity of both the original training model configurations and the synthesized composite models to the test configurations. In addition, results are presented to show the SAR recognition variants of MSTAR vehicle targets.

This paper focuses on a genetic algorithm based method that automates the construction of local feature based composite class models to capture the salient characteristics of configuration variants of vehicle targets in SAR imagery and increase the performance of SAR recognition systems. The recognition models are based on quasi-invariant local features: SAR scattering center locations and magnitudes. The approach uses an efficient SAR recognition system as an evaluation function to determine the fitness class models. Experimental results are given on the fitness of the composite models and the similarity of both the original training model configurations and the synthesized composite models to the test configurations. In addition, results are presented to show the SAR recognition variants of MSTAR vehicle targets.

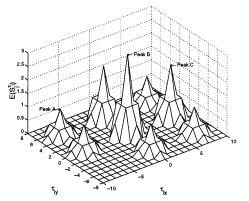

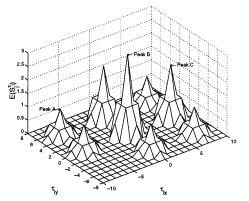

Performance modeling of vote-based object recognition

.png) The focus of this paper is predicting the bounds on performance of a vote-based object recognition system, when the test data features are distorted by uncertainty in both feature locations and magnitudes, by occlusion and by clutter. An improved method is presented to calculate lower and upper bound predictions of the probability that objects with various levels of distorted features will be recognized correctly. The prediction method takes model similarity into account, so that when models of objects are more similar to each other, then the probability of correct recognition is lower. The effectiveness of the prediction method is validated in a synthetic aperture radar (SAR) automatic target recognition (ATR) application using MSTAR public SAR data, which are obtained under different depression angles, object configurations and object articulations. Experiments show the performance improvement that can obtained by considering the feature magnitudes, compared to a previous performance prediction method that only considered the locations of features. In addition, the predicted performance is compared with actual performance of a vote-based SAR recognition system using the same SAR scatterer location and magnitude features.

The focus of this paper is predicting the bounds on performance of a vote-based object recognition system, when the test data features are distorted by uncertainty in both feature locations and magnitudes, by occlusion and by clutter. An improved method is presented to calculate lower and upper bound predictions of the probability that objects with various levels of distorted features will be recognized correctly. The prediction method takes model similarity into account, so that when models of objects are more similar to each other, then the probability of correct recognition is lower. The effectiveness of the prediction method is validated in a synthetic aperture radar (SAR) automatic target recognition (ATR) application using MSTAR public SAR data, which are obtained under different depression angles, object configurations and object articulations. Experiments show the performance improvement that can obtained by considering the feature magnitudes, compared to a previous performance prediction method that only considered the locations of features. In addition, the predicted performance is compared with actual performance of a vote-based SAR recognition system using the same SAR scatterer location and magnitude features.

\

Genetic Algorithm Based Feature Selection for Target Detection in SAR Images

A genetic algorithm (GA) approach is presented to select a set of features to discriminate the targets from

the natural clutter false alarms in SAR images. A new fitness function based on minimum

description length principle (MDLP) is proposed to drive GA and it is compared with three other fitness

functions. Experimental results show that the new fitness function outperforms the other three fitness

functions and the GA driven by it selected a good subset of features to discriminate the targets from

clutters effectively.

A genetic algorithm (GA) approach is presented to select a set of features to discriminate the targets from

the natural clutter false alarms in SAR images. A new fitness function based on minimum

description length principle (MDLP) is proposed to drive GA and it is compared with three other fitness

functions. Experimental results show that the new fitness function outperforms the other three fitness

functions and the GA driven by it selected a good subset of features to discriminate the targets from

clutters effectively.

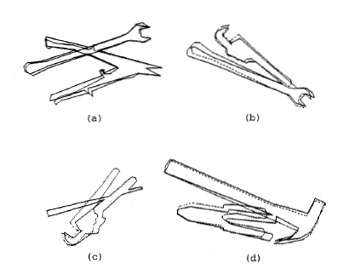

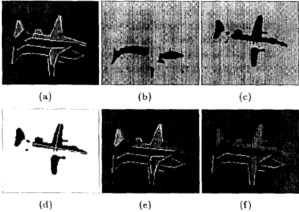

Discovering operators and features for object detection

.png) In this paper, we learn to discover composite operators and features that are evolved from combinations of primitive image processing operations to extract regions-of-interest (ROls) in images. Our approach is based on genetic programming (GP). The motivation for using GP is that there are a great many ways of combining these primitive operations and the human expert, limited by experience, knowledge and time. can only try a very small number of conventional ways of combination. Genetic programming, on the other hand, attempts many unconventional ways of combination that may never be imagined by human experts. In some cases, these unconventional combinations yield exceptionally good results. Our experimental results show that GP can find good composite operators to effectively extract the regions of interest in an image and the. learned composite operators can be applied to extract ROls in other similar images.

In this paper, we learn to discover composite operators and features that are evolved from combinations of primitive image processing operations to extract regions-of-interest (ROls) in images. Our approach is based on genetic programming (GP). The motivation for using GP is that there are a great many ways of combining these primitive operations and the human expert, limited by experience, knowledge and time. can only try a very small number of conventional ways of combination. Genetic programming, on the other hand, attempts many unconventional ways of combination that may never be imagined by human experts. In some cases, these unconventional combinations yield exceptionally good results. Our experimental results show that GP can find good composite operators to effectively extract the regions of interest in an image and the. learned composite operators can be applied to extract ROls in other similar images.

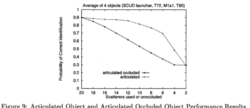

Exploiting azimuthal variance of scatterers for multiple look SAR recognition

.png) The focus of this paper is optimizing the recognition of vehicles in Synthetic Aperture Radar (SAR) imagery using multiple SAR recognizers at different look angles. The variance of SAR scattering center locations with target azimuth leads to recognition system results at different azimuths that are independent, even for small azimuth deltas. Extensive experimental recognition results are presented in terms of receiver operating characteristic (ROC) curves to show the effects of multiple look angles on recognition performance for MSTAR vehicle targets with configuration variants, articulation, and occlusion.

The focus of this paper is optimizing the recognition of vehicles in Synthetic Aperture Radar (SAR) imagery using multiple SAR recognizers at different look angles. The variance of SAR scattering center locations with target azimuth leads to recognition system results at different azimuths that are independent, even for small azimuth deltas. Extensive experimental recognition results are presented in terms of receiver operating characteristic (ROC) curves to show the effects of multiple look angles on recognition performance for MSTAR vehicle targets with configuration variants, articulation, and occlusion.

Increasing the Discrimination of Synthetic Aperture Radar Recognition Models

The focus of this work is optimizing recognition models for synthetic aperture radar (SAR)

signatures of vehicles to improve the performance of a recognition algorithm under the extended

operating conditions of target articulation, occlusion, and configuration variants. The approach

determines the similarities and differences among the various vehicle models. Methods to penalize similar

features or reward dissimilar features are used to increase the distinguishability of the recognition model

instances.

The focus of this work is optimizing recognition models for synthetic aperture radar (SAR)

signatures of vehicles to improve the performance of a recognition algorithm under the extended

operating conditions of target articulation, occlusion, and configuration variants. The approach

determines the similarities and differences among the various vehicle models. Methods to penalize similar

features or reward dissimilar features are used to increase the distinguishability of the recognition model

instances.

Feature selection for target detection in SAR images

.png) A genetic algorithm (GA) approach is presented to select a set of features to discriminate the targets from the natural clutter false alarms in SAR images. Four stages of an automatic target detection system are developed: the rough target detection, feature extraction from the potential target regions, GA based feature selection and the final Bayesian classification. Experimental results show that the GA selected a good subset of features that gave similar performance to using all the features.

A genetic algorithm (GA) approach is presented to select a set of features to discriminate the targets from the natural clutter false alarms in SAR images. Four stages of an automatic target detection system are developed: the rough target detection, feature extraction from the potential target regions, GA based feature selection and the final Bayesian classification. Experimental results show that the GA selected a good subset of features that gave similar performance to using all the features.

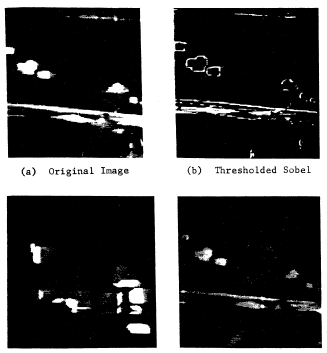

Multistrategy fusion using mixture model for moving object detection

.png) In a video surveillance domain, mixture models are used in conjunction with a variety of features and filters to detect and track moving objects. However, these systems do not provide clear performance results at the pixel detection level. In this paper, we apply the mixture model to provide several fusion strategies based on the competitive and cooperative principles of integration which we call OR, and AND strategies. In addition, we apply the Dempster-Shafer method to mixture models for object detection. Using two video databases, we show the performance of each fusion strategy using receiver operating characteristic (ROC) curves.

In a video surveillance domain, mixture models are used in conjunction with a variety of features and filters to detect and track moving objects. However, these systems do not provide clear performance results at the pixel detection level. In this paper, we apply the mixture model to provide several fusion strategies based on the competitive and cooperative principles of integration which we call OR, and AND strategies. In addition, we apply the Dempster-Shafer method to mixture models for object detection. Using two video databases, we show the performance of each fusion strategy using receiver operating characteristic (ROC) curves.

Predicting an Upper Bound on SAR ATR Performance

We present a method for predicting a tight upper bound on performance of a vote-based approach for

automatic target recognition (ATR) in synthetic aperture radar (SAR) images. The proposed method

considers data distortion factors such as uncertainty, occlusion,

and clutter, as well as model factors such as structural similarity. The proposed method is validated using

MSTAR public SAR data, which are obtained

under different depression angles, configurations, and articulations.

We present a method for predicting a tight upper bound on performance of a vote-based approach for

automatic target recognition (ATR) in synthetic aperture radar (SAR) images. The proposed method

considers data distortion factors such as uncertainty, occlusion,

and clutter, as well as model factors such as structural similarity. The proposed method is validated using

MSTAR public SAR data, which are obtained

under different depression angles, configurations, and articulations.

Recognizing Occluded Objects in SAR Images

Recognition

algorithms, based on local features, are presented that successfully recognize highly occluded objects in both

XPATCH synthetic SAR signatures and real SAR images of actual vehicles from the MSTAR data. Extensive

experimental results are presented for a basic recognition algorithm and for an improved algorithm.

The results show the effect of occlusion on

recognition performance in terms of probability of correct identification (PCI), receiver operating characteristic

(ROC) curves, and confusion matrices.

Recognition

algorithms, based on local features, are presented that successfully recognize highly occluded objects in both

XPATCH synthetic SAR signatures and real SAR images of actual vehicles from the MSTAR data. Extensive

experimental results are presented for a basic recognition algorithm and for an improved algorithm.

The results show the effect of occlusion on

recognition performance in terms of probability of correct identification (PCI), receiver operating characteristic

(ROC) curves, and confusion matrices.

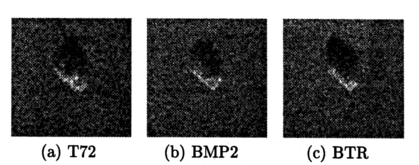

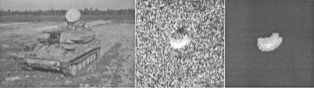

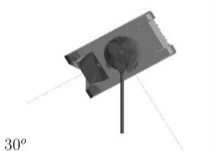

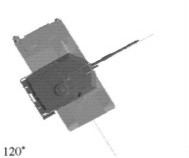

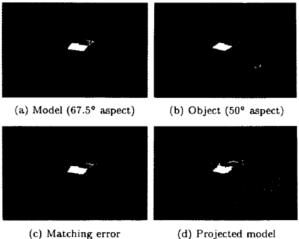

Recognizing target variants and articulations in synthetic aperture radar images

.png) The focus of this paper is recognizing articulated vehicles and actual vehicle configuration variants in real synthetic aperture radar (SAR) images. Using SAR scattering-center locations and magnitudes as features, the invariance of these features is shown with articulation (e.g., rotation of a tank turret), with configuration variants, and with a small change in depression angle. This scatterer-location and magnitude quasiinvariance is used as a basis for development of a SAR recognition system that successfully identifies real articulated and nonstandard- configuration vehicles based on nonarticulated, standard recognition models. Identification performance results are presented as vote-space scatterplots and receiver operating characteristic curves for configuration variants, for articulated objects, and for a small change in depression angle with the MSTAR public data.

The focus of this paper is recognizing articulated vehicles and actual vehicle configuration variants in real synthetic aperture radar (SAR) images. Using SAR scattering-center locations and magnitudes as features, the invariance of these features is shown with articulation (e.g., rotation of a tank turret), with configuration variants, and with a small change in depression angle. This scatterer-location and magnitude quasiinvariance is used as a basis for development of a SAR recognition system that successfully identifies real articulated and nonstandard- configuration vehicles based on nonarticulated, standard recognition models. Identification performance results are presented as vote-space scatterplots and receiver operating characteristic curves for configuration variants, for articulated objects, and for a small change in depression angle with the MSTAR public data.

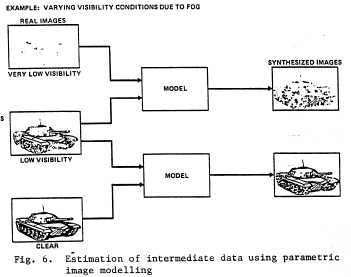

Adaptive target recognition

.png) Target recognition is a multilevel process requiring a sequence of algorithms at low, intermediate and high levels. Generally, such systems are open loop with no feedback between levels and assuring their performance at the given probability of correct identification (PCI) and probability of false alarm (P f) is a key challenge in computer vision and pattern recognition research. In this paper, a robust closed-loop system for recognition of SAR images based on reinforcement learning is presented. The parameters in model-based SAR target recognition are learned. It has been experimentally validated by learning the parameters of the recognition system for SAR imagery, successfully recognizing articulated targets, targets of different configuration and targets at different depression angles.

Target recognition is a multilevel process requiring a sequence of algorithms at low, intermediate and high levels. Generally, such systems are open loop with no feedback between levels and assuring their performance at the given probability of correct identification (PCI) and probability of false alarm (P f) is a key challenge in computer vision and pattern recognition research. In this paper, a robust closed-loop system for recognition of SAR images based on reinforcement learning is presented. The parameters in model-based SAR target recognition are learned. It has been experimentally validated by learning the parameters of the recognition system for SAR imagery, successfully recognizing articulated targets, targets of different configuration and targets at different depression angles.

Predicting Performance of Object Recognition

We present a method for predicting fundamental performance of object recognition.

The proposed method considers data distortion factors such as uncertainty,

occlusion, and clutter, in addition to model similarity. This is unlike previous approaches, which consider

only a subset of these factors. Performance is predicted in two stages. In the first stage, the similarity between