
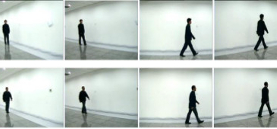

Age Classification Based on Gait Using HMM

We have proposed a new framework for age classification based on human gait using a Hidden Markov Model (HMM). To extract appropriate gait features, we use a contour-related method that captures shape variations during human walking. The image features are then transformed into a lower-dimensional space using the Frame-to-Exemplar Distance (FED). An HMM is trained on the resulting FED vector sequences. The framework therefore allows flexibility in the choice of gait feature representation, and it is robust for classification because of the statistical nature of the HMM. The experimental results show that video-based automatic age classification from human gait is feasible and reliable.
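
The FED step can be illustrated with a minimal Python sketch. It assumes each frame has already been reduced to a fixed-length contour feature vector and that K exemplar vectors (for example, representative stances chosen from training data) are given. The Euclidean distance and the names `fed_vector` and `fed_sequence` are assumptions for illustration, not the authors' definitions, and HMM training itself is not shown.

```python
import math
from typing import List, Sequence

def fed_vector(frame: Sequence[float], exemplars: Sequence[Sequence[float]]) -> List[float]:
    """Distances from one frame's feature vector to each of the K exemplars.

    Euclidean distance is an assumption made for this sketch.
    """
    return [math.dist(frame, e) for e in exemplars]

def fed_sequence(frames: Sequence[Sequence[float]],
                 exemplars: Sequence[Sequence[float]]) -> List[List[float]]:
    """Turn a walking sequence (one feature vector per frame) into a sequence of
    K-dimensional FED vectors, i.e. the observation sequence an HMM would model."""
    return [fed_vector(f, exemplars) for f in frames]

# Usage: two 2-D frame features and two hypothetical exemplars give two 2-D FED vectors.
frames = [[0.0, 0.0], [3.0, 4.0]]
exemplars = [[0.0, 0.0], [3.0, 0.0]]
print(fed_sequence(frames, exemplars))  # [[0.0, 3.0], [5.0, 4.0]]
```

Because each FED vector has K entries regardless of how long the raw contour feature is, the HMM's observation dimension depends only on the number of exemplars, which appears to be where the flexibility in choosing the feature representation comes from.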


Ethnicity Classification Based on Gait Using Multi-view Fusion

Determining an individual's ethnicity, as a soft biometric, can be very useful in a video-based surveillance system. Currently, the face is commonly used to determine a person's ethnicity. Until now, gait has been used for individual recognition and gender classification but not for ethnicity determination. In this research, the Gait Energy Image (GEI) is used to analyze the recognition power of gait for ethnicity. Feature fusion, score fusion, and decision fusion from multiple views of gait are explored. For feature fusion, the GEI images from all camera views are stacked into a third-order tensor (x, y, view), and multilinear principal component analysis (MPCA) is used to extract features from these tensor objects, which integrate all views. For score fusion, the similarity scores measured from single views are combined with a weighted SUM rule. For decision fusion, ethnicity classification is first performed on each individual view, and the per-view results are then combined into a final decision with a majority vote rule. A database of 36 walking people (East Asian and South American) was acquired from 7 different camera views. The experimental results show that ethnicity can be determined automatically from human gait in video. The classification rate improves when multiple camera views are fused, and a comparison of the fusion schemes shows that MPCA-based feature fusion performs best.
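
The score-fusion and decision-fusion rules described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: per-view similarity scores are already normalized to a common range, the view weights are hypothetical (in practice they would be tuned on training data), and the class labels are placeholders. MPCA-based feature fusion is not shown.

```python
from collections import Counter
from typing import Dict, List

def weighted_sum_fusion(view_scores: List[Dict[str, float]], weights: List[float]) -> str:
    """Score fusion: add up each class's per-view similarity scores, weighted per view,
    and return the class with the largest fused score."""
    fused: Dict[str, float] = {}
    for scores, w in zip(view_scores, weights):
        for label, s in scores.items():
            fused[label] = fused.get(label, 0.0) + w * s
    return max(fused, key=fused.get)

def majority_vote(view_decisions: List[str]) -> str:
    """Decision fusion: each view is classified on its own and the most frequent label wins.
    Ties go to the label seen first (Counter.most_common keeps first-encountered order)."""
    return Counter(view_decisions).most_common(1)[0][0]

# Hypothetical similarity scores from three camera views.
scores = [
    {"east_asian": 0.9, "south_american": 0.1},
    {"east_asian": 0.2, "south_american": 0.8},
    {"east_asian": 0.6, "south_american": 0.4},
]
print(weighted_sum_fusion(scores, [0.5, 0.2, 0.3]))  # east_asian
print(majority_vote(["east_asian", "south_american", "east_asian"]))  # east_asian
```

The weighted SUM rule lets a more reliable view outvote a less reliable one, while majority voting treats every view equally once each has made a hard decision.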


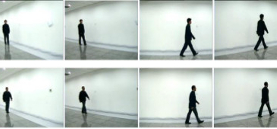

Human recognition in a video network

Video networks are an emerging interdisciplinary field with significant and exciting scientific and technological challenges. The field holds great promise for solving many real-world problems and enabling a broad range of applications, including smart homes, video surveillance, environment and traffic monitoring, elderly care, intelligent environments, and entertainment in public and private spaces. This paper provides an overview of the design of a wireless video network as an experimental environment, together with camera selection, hand-off and control, and anomaly detection. It addresses challenging questions in identifying individuals at a distance using gait and face, and presents new techniques for robust identification along with a comparison of them.


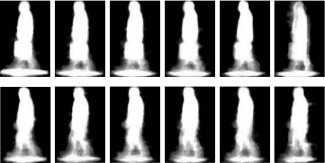

Recognition of Walking Humans in 3D: Initial Results

It has been challenging to recognize walking humans at arbitrary poses from a single camera or a small number of video cameras. Previous attempts have mostly used 2D image- or silhouette-based representations, with limited use of 3D kinematic model-based approaches. Unlike all previous work in computer vision and pattern recognition, here the models of walking humans are built from sensed 3D range data at selected poses, without any markers. An instance of a walking individual at a different pose is recognized using the 3D range data of that pose, so both modeling and recognition of an individual are done with dense 3D range data. The proposed approach first measures 3D human body data consisting of representative poses during a gait cycle. Next, a 3D human body model is fitted to the body data using an approach that overcomes the inherent gaps in the data and estimates the body pose with high accuracy. A gait sequence is synthesized by interpolating joint positions and their movements from the fitted body models. Both dynamic and static gait features are obtained and used to define a similarity measure for recognizing an individual in the database. The experimental results show high recognition rates on our range-based 3D gait database.
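
The step of synthesizing a gait sequence by interpolating joint positions between fitted key poses can be sketched as follows. Linear interpolation is an assumption made here for simplicity (the abstract does not say which interpolation scheme is used), and the joint names are hypothetical.

```python
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]
Pose = Dict[str, Point3]  # hypothetical joint name -> (x, y, z) position

def lerp(a: Point3, b: Point3, t: float) -> Point3:
    """Linear interpolation between two 3-D points, t in [0, 1]."""
    return (a[0] + t * (b[0] - a[0]),
            a[1] + t * (b[1] - a[1]),
            a[2] + t * (b[2] - a[2]))

def synthesize_sequence(key_poses: List[Pose], steps: int) -> List[Pose]:
    """Insert `steps` evenly spaced in-between frames between consecutive key poses."""
    if not key_poses:
        return []
    frames: List[Pose] = []
    for p, q in zip(key_poses, key_poses[1:]):
        for k in range(steps + 1):
            t = k / (steps + 1)  # t = 0 reproduces the key pose p itself
            frames.append({joint: lerp(p[joint], q[joint], t) for joint in p})
    frames.append(key_poses[-1])
    return frames

keys = [{"knee": (0.0, 0.0, 0.0)}, {"knee": (2.0, 4.0, 0.0)}]
seq = synthesize_sequence(keys, steps=1)
print(len(seq), seq[1]["knee"])  # 3 (1.0, 2.0, 0.0)
```

A smoother scheme such as spline interpolation, or wrapping the last key pose back to the first for a periodic gait cycle, would be natural refinements of this sketch.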


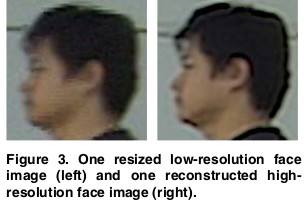

Human Recognition at a Distance

This paper considers face, side face, gait, and ear, and their possible fusion, for human recognition. It presents an overview of techniques we have developed for (a) super-resolution-based face recognition in video, (b) gait-based recognition in video, (c) fusion of super-resolved side face and gait in video, (d) ear recognition in color/range images, and (e) fusion performance prediction and validation. It presents various real-world examples to illustrate the ideas and points out the relative merits of the approaches discussed.


Feature fusion of side face and gait for video-based human identification

Video-based human recognition at a distance remains a challenging problem for the fusion of multimodal biometrics. We present a new approach that uses and integrates information from side face and gait at the feature level. Face and gait features are obtained separately by principal component analysis (PCA) from the enhanced side face image (ESFI) and the gait energy image (GEI), respectively. Multiple discriminant analysis (MDA) is then applied to the concatenated face and gait features to obtain discriminating synthetic features. The experimental results demonstrate that the synthetic features, which encode both side face and gait information, carry more discriminating power than the individual biometric features, and that the proposed feature-level fusion scheme outperforms both match-score-level fusion and another feature-level fusion scheme.
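
Feature-level fusion starts by putting the two modalities into one vector. The sketch below assumes the PCA projections of the ESFI and GEI are already computed and that each modality is z-score normalized with training-set statistics before concatenation; that normalization step is an assumption, not something the abstract specifies, and the MDA projection applied afterwards is not shown.

```python
from typing import List, Sequence, Tuple

Stats = Tuple[Sequence[float], Sequence[float]]  # (per-dimension mean, std) from training data

def zscore(v: Sequence[float], stats: Stats) -> List[float]:
    mean, std = stats
    # Dimensions with zero spread carry no information; map them to 0.
    return [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(v, mean, std)]

def fuse_features(face: Sequence[float], gait: Sequence[float],
                  face_stats: Stats, gait_stats: Stats) -> List[float]:
    """Feature-level fusion: normalize each modality separately, then concatenate.
    The fused vector is what a discriminant step such as MDA would receive."""
    return zscore(face, face_stats) + zscore(gait, gait_stats)

fused = fuse_features([2.0], [0.0, 4.0], ([1.0], [1.0]), ([0.0, 2.0], [1.0, 2.0]))
print(fused)  # [1.0, 0.0, 1.0]
```

Normalizing before concatenation keeps one modality from dominating the fused vector simply because its PCA coefficients have a larger numeric range.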


Integrating Face and Gait for Human Recognition at a Distance in Video

We have introduced a new video-based method to recognize non-cooperating individuals at a distance who expose side views to the camera. Information from two biometric sources, side face and gait, is used and integrated for recognition. For side face, we construct an enhanced side-face image (ESFI), which has a higher resolution than the image obtained directly from a single video frame. For gait, the gait energy image (GEI), a compact spatio-temporal representation of gait in video, is used to characterize human walking properties. The experimental results show that constructing the ESFI from multiple frames is a promising idea for human recognition in video, and that better face features are extracted from the ESFI than from the original side-face images (OSFIs).


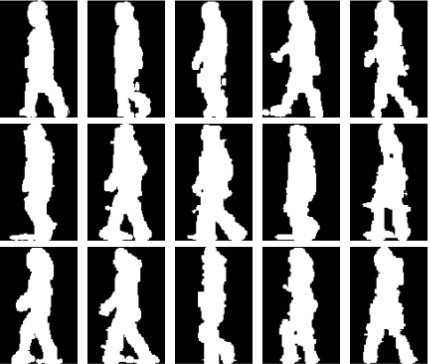

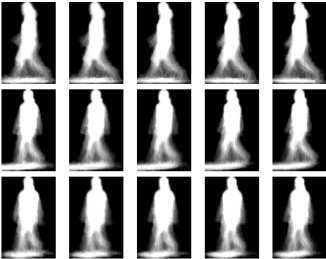

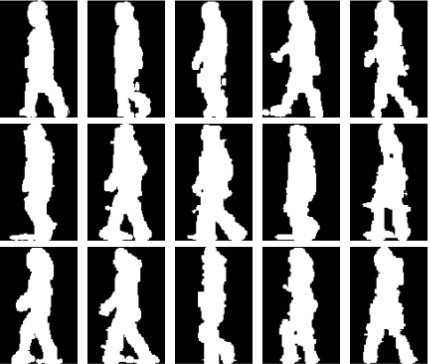

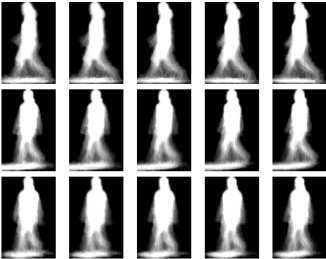

Individual Recognition Using Gait Energy Image

We propose a new spatio-temporal gait representation, called the Gait Energy Image (GEI), to characterize human walking properties for individual recognition by gait. To address the lack of training templates, we generate a series of new GEI templates by analyzing human silhouette distortion under various conditions. Principal component analysis followed by multiple discriminant analysis is used to learn features from the expanded set of GEI training templates, and recognition is carried out on the learned features. Experimental results show that the proposed GEI is an effective and efficient gait representation for individual recognition, and that the proposed approach achieves highly competitive performance compared with current gait recognition approaches.
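
The GEI is usually defined as the pixel-wise average of aligned, size-normalized binary silhouettes: G(x, y) = (1/N) · Σ_t B_t(x, y), where B_t is the silhouette in frame t and N is the number of frames. The sketch below computes exactly that average in pure Python; silhouette extraction, alignment, size normalization, and gait-cycle detection are assumed to have been done already.

```python
from typing import List, Sequence

Silhouette = Sequence[Sequence[int]]  # binary mask, 1 = body pixel, 0 = background

def gait_energy_image(silhouettes: List[Silhouette]) -> List[List[float]]:
    """G(x, y) = (1/N) * sum_t B_t(x, y): the pixel-wise mean of N aligned,
    size-normalized binary silhouettes covering one or more gait cycles."""
    if not silhouettes:
        raise ValueError("need at least one silhouette")
    n = len(silhouettes)
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    gei = [[0.0] * cols for _ in range(rows)]
    for s in silhouettes:
        for y in range(rows):
            for x in range(cols):
                gei[y][x] += s[y][x]
    return [[v / n for v in row] for row in gei]

print(gait_energy_image([[[1, 0], [1, 1]], [[1, 1], [0, 1]]]))
# [[1.0, 0.5], [0.5, 1.0]]
```

Pixels that belong to the body in every frame (such as the torso) approach 1, while pixels swept only part of the time (such as the swinging limbs) take intermediate values, which is how a single image keeps some of the motion information.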


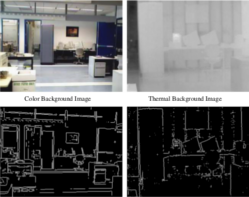

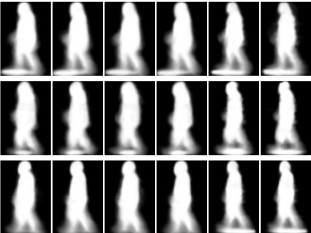

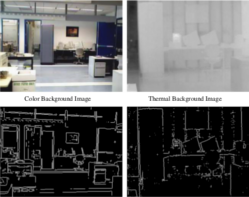

Fusion of Color and Infrared Video for Moving Human Detection

Image registration between color and thermal images is a challenging problem because of the difficulty of finding correspondences. However, moving people in a static scene provide cues that help address this problem. We propose a hierarchical scheme that automatically finds correspondences between preliminary human silhouettes extracted from synchronous color and thermal image sequences, and uses them for image registration. The proposed approach is shown to achieve good results for both image registration and human silhouette extraction. Experimental results also compare various sensor fusion strategies and demonstrate improved human silhouette extraction over the non-fused cases.


Feature Fusion of Face and Gait for Human Recognition at a Distance in Video

A new video-based recognition method is presented to recognize non-cooperating individuals at a distance in video who expose side views to the camera. Information from two biometric sources, side face and gait, is used and integrated at the feature level. For face, a high-resolution side face image is constructed from multiple video frames. For gait, the Gait Energy Image (GEI), a compact spatio-temporal representation of gait in video, is used to characterize human walking properties. Face features and gait features are obtained separately by a combined Principal Component Analysis (PCA) and Multiple Discriminant Analysis (MDA) method from the high-resolution side face image and the GEI, respectively. The system is tested on a database of video sequences of 46 people. The results show that the integrated face and gait features carry more discriminating power than either individual biometric.


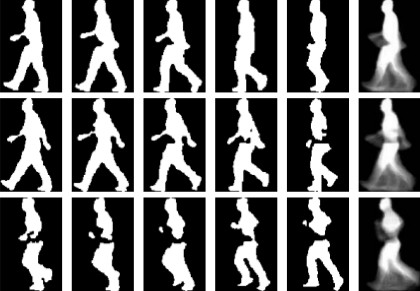

Human Activity Classification Based on Gait Energy Image and Coevolutionary Genetic Programming

We present a novel approach based on the gait energy image (GEI) and co-evolutionary genetic programming (CGP) for human activity classification. Specifically, Hu's moments and normalized histogram bins are extracted from the original GEIs as input features. CGP is employed to reduce the feature dimensionality and learn the classifiers. Majority voting is applied to the CGP classifiers to improve overall performance, taking advantage of the diversity of solutions that genetic programming produces. This learning-based approach improves classification accuracy by approximately 7 percent compared with traditional classifiers.
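
The two kinds of input features named above can be sketched in pure Python. This assumes GEI values lie in [0, 1]; the number of histogram bins and the choice to compute only the first two of Hu's seven moment invariants are assumptions made to keep the example short. The CGP stage is not shown.

```python
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]  # GEI values in [0, 1]

def normalized_histogram(img: Image, bins: int) -> List[float]:
    """Fraction of pixels falling into each of `bins` equal-width intensity bins."""
    counts = [0] * bins
    total = 0
    for row in img:
        for v in row:
            counts[min(int(v * bins), bins - 1)] += 1  # v == 1.0 goes to the last bin
            total += 1
    return [c / total for c in counts]

def hu_first_two(img: Image) -> Tuple[float, float]:
    """First two Hu invariants from normalized central moments eta_pq."""
    m00 = sum(v for row in img for v in row)
    if m00 == 0:
        raise ValueError("empty image")
    xbar = sum(x * v for row in img for x, v in enumerate(row)) / m00
    ybar = sum(y * v for y, row in enumerate(img) for v in row) / m00

    def mu(p: int, q: int) -> float:
        return sum((x - xbar) ** p * (y - ybar) ** q * v
                   for y, row in enumerate(img) for x, v in enumerate(row))

    def eta(p: int, q: int) -> float:
        return mu(p, q) / m00 ** (1 + (p + q) / 2)

    e20, e02, e11 = eta(2, 0), eta(0, 2), eta(1, 1)
    return e20 + e02, (e20 - e02) ** 2 + 4 * e11 ** 2

print(normalized_histogram([[0.0, 0.5], [1.0, 0.25]], bins=2))  # [0.5, 0.5]
```

Because the moments are computed about the centroid and normalized by m00, the Hu invariants do not change when the same pattern is shifted within the image, which suits GEIs whose horizontal alignment varies slightly.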


Integrating Face and Gait for Human Recognition

We introduce a new video-based method to recognize non-cooperating individuals at a distance in video who expose side views to the camera. Information from two biometric sources, side face and gait, is used and integrated for recognition. For side face, we construct an Enhanced Side Face Image (ESFI), which has a higher resolution than the image obtained directly from a single video frame, by fusing face information from multiple video frames. For gait, we use the Gait Energy Image (GEI), a compact spatio-temporal representation of gait in video, to characterize human walking properties. Face features and gait features are obtained separately by a combined Principal Component Analysis (PCA) and Multiple Discriminant Analysis (MDA) method from the ESFI and the GEI, respectively, and are then integrated at the match score level. Our approach is tested on a database of video sequences of 46 people, and different fusion methods are compared and analyzed. The experimental results show that (a) integrating information from side face and gait is effective for human recognition in video, and (b) constructing the ESFI from multiple frames is promising for human recognition in video, since better face features are extracted from the ESFI than from the original face images.
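
Match-score-level fusion can be sketched as follows. The abstract does not name the fusion rules that were compared, so the min-max normalization and the Sum, Product, and Max rules below are common textbook choices used here as assumptions, and the gallery identities are hypothetical.

```python
from typing import Callable, Dict

def minmax_similarity(distances: Dict[str, float]) -> Dict[str, float]:
    """Map distances to similarities in [0, 1]: nearest gallery entry -> 1, farthest -> 0."""
    lo, hi = min(distances.values()), max(distances.values())
    span = (hi - lo) or 1.0  # all distances equal: every entry gets similarity 1
    return {k: 1.0 - (d - lo) / span for k, d in distances.items()}

RULES: Dict[str, Callable[[float, float], float]] = {
    "sum": lambda a, b: a + b,
    "product": lambda a, b: a * b,
    "max": max,
}

def fuse_match_scores(face_dist: Dict[str, float], gait_dist: Dict[str, float],
                      rule: str = "sum") -> str:
    """Normalize each modality's distances, combine them per gallery identity, pick the best."""
    face, gait = minmax_similarity(face_dist), minmax_similarity(gait_dist)
    combine = RULES[rule]
    fused = {k: combine(face[k], gait[k]) for k in face}
    return max(fused, key=fused.get)

face_dist = {"p1": 1.0, "p2": 3.0, "p3": 2.0}
gait_dist = {"p1": 4.0, "p2": 0.0, "p3": 1.0}
print(fuse_match_scores(face_dist, gait_dist, "sum"))  # p3
```

In this toy example neither modality alone ranks p3 first (face prefers p1, gait prefers p2), yet p3 scores well in both and wins under the Sum rule, which is the kind of complementarity score-level fusion is meant to exploit.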


A Study on View-insensitive Gait Recognition

Most gait recognition approaches only study humans walking fronto-parallel to the image plane, which is unrealistic in video surveillance applications. Human gait appearance depends on various factors, including the locations of the camera and the person, the camera axis, and the walking direction. By analyzing these factors, we propose a statistical approach for view-insensitive gait recognition. The proposed approach recognizes humans using a single camera and avoids the difficulties of recovering the human body structure and calibrating the camera. Experimental results show that the proposed approach achieves good performance in recognizing individuals walking in different directions.


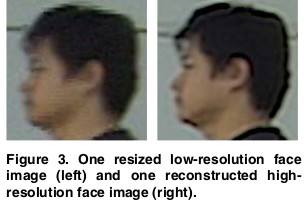

Human recognition at a distance in video by integrating face profile and gait

Human recognition from arbitrary views is an important task for many applications, such as visual surveillance, covert security, and access control. It has proven very difficult in practice, especially when a person is walking at a distance in real-world outdoor conditions. For optimal performance, the system should use as much information from the observations as possible. In this paper, we propose an innovative system that combines cues from the face profile and the gait silhouette in single-camera video sequences. For optimal face profile recognition, we first reconstruct a high-resolution face profile image from several adjacent low-resolution video frames, and then use a curvature-based matching method for recognition. For gait, we use the Gait Energy Image (GEI) to characterize human walking properties, and recognition is carried out by direct GEI matching. Several schemes are considered for fusing face profile and gait. A number of dynamic video sequences are tested to evaluate the performance of the system, and the experimental results are compared and discussed.
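
Direct GEI matching can be read as nearest-neighbor classification on the raw templates. The sketch below assumes Euclidean distance between GEIs of equal size; the abstract does not state the metric, and the gallery names are hypothetical. Returning the distance as well as the identity makes the result usable as a match score for fusion with the face profile.

```python
import math
from typing import Dict, List, Tuple

GEI = List[List[float]]

def gei_distance(a: GEI, b: GEI) -> float:
    """Euclidean distance between two equally sized GEIs, treated as flat vectors."""
    return math.sqrt(sum((va - vb) ** 2
                         for ra, rb in zip(a, b) for va, vb in zip(ra, rb)))

def match_gei(probe: GEI, gallery: Dict[str, GEI]) -> Tuple[str, float]:
    """Return the gallery identity whose GEI is closest to the probe, plus that distance."""
    best = min(gallery, key=lambda k: gei_distance(probe, gallery[k]))
    return best, gei_distance(probe, gallery[best])

gallery = {"alice": [[1.0, 0.0], [0.0, 1.0]], "bob": [[0.0, 1.0], [1.0, 0.0]]}
print(match_gei([[0.9, 0.1], [0.0, 1.0]], gallery)[0])  # alice
```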


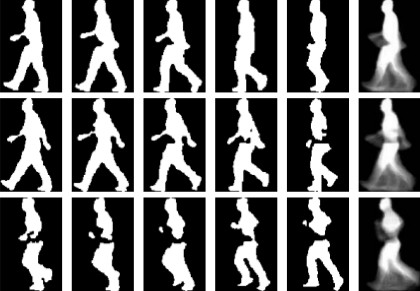

Gait recognition by combining classifiers based on environmental contexts

Human gait properties can be affected by various environmental contexts, such as the walking surface and carried objects. In this paper, we propose a novel approach for individual recognition that combines different gait classifiers with knowledge of the environmental contexts to improve recognition performance. Different classifiers are designed to handle different environmental contexts, and context-specific features are explored for context characterization. During recognition, we determine the probability of each environmental context in a probe sequence from its context features, and then apply probabilistic classifier combination strategies.  Experimental results demonstrate the effectiveness of the proposed approach.
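
One probabilistic combination strategy consistent with this description is the total-probability rule P(id | x) = Σ_c P(c | x) · P(id | x, c): each context-specific classifier's posterior is weighted by the estimated probability of its context. The sketch assumes both sets of probabilities are already available. This rule is an illustrative choice, not necessarily the one used in the paper, and the context and subject names are hypothetical.

```python
from typing import Dict

def combine_by_context(context_probs: Dict[str, float],
                       classifier_posteriors: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """P(id | x) = sum over contexts c of P(c | x) * P(id | x, c).

    context_probs:          P(c | x), estimated from the probe's context features
    classifier_posteriors:  for each context c, the posteriors P(id | x, c) produced by
                            the classifier designed for that context
    """
    combined: Dict[str, float] = {}
    for context, p_c in context_probs.items():
        for identity, p_id in classifier_posteriors[context].items():
            combined[identity] = combined.get(identity, 0.0) + p_c * p_id
    return combined

# Hypothetical walking-surface contexts and two enrolled subjects.
result = combine_by_context(
    {"grass": 0.7, "concrete": 0.3},
    {"grass": {"s1": 0.2, "s2": 0.8}, "concrete": {"s1": 0.9, "s2": 0.1}},
)
print(max(result, key=result.get))  # s2
```

If the context estimate is confident (one P(c | x) near 1), this reduces to picking the matching context-specific classifier; otherwise it blends them smoothly.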


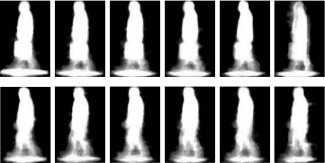

Human Activity Recognition in Thermal Infrared Imagery

Here we investigate the properties of repetitive human activities in thermal infrared imagery, where human motion can be easily detected against the background regardless of lighting conditions and the colors of human surfaces and backgrounds. We employ an efficient spatio-temporal representation for repetitive human activity recognition that represents a human motion sequence in a single image while preserving some temporal information. A statistical approach is used to extract features for activity recognition. Experimental results show that the proposed approach achieves good performance for repetitive human activity recognition.


Performance Prediction for Individual Recognition by Gait

Existing gait recognition approaches do not provide theoretical or experimental performance predictions, so the discriminating power of gait as a feature for human recognition cannot be evaluated. We propose a kinematic-based approach to recognize humans by gait. The proposed approach estimates 3D human walking parameters by performing a least-squares fit of a 3D kinematic model to the 2D silhouette extracted from a monocular image sequence.


Individual Recognition Using Gait Energy Image

In this paper, we propose a new spatio-temporal gait representation, called the Gait Energy Image (GEI), to characterize human walking properties for individual recognition by gait. To address the lack of training templates, we generate a series of new GEI templates by analyzing human silhouette distortion under various conditions. Principal component analysis followed by multiple discriminant analysis is used to learn features from the expanded GEI training templates, and recognition is carried out based on the learned features. Experimental results show that the proposed GEI is an effective and efficient gait representation for individual recognition, and that the proposed approach achieves highly competitive performance compared with current gait recognition approaches.


Statistical Feature Fusion for Gait-based Human Recognition

This paper presents a novel approach for human recognition by combining statistical gait features from real and synthetic templates. Real templates are computed directly from training silhouette sequences, while synthetic templates are generated from training sequences by simulating silhouette distortion. A statistical feature extraction approach is used to learn effective features from real and synthetic templates. Features learned from real templates characterize the human walking properties present in the training sequences, and features learned from synthetic templates predict gait properties under other conditions. A feature fusion strategy is therefore applied at the decision level to improve recognition performance. We apply the proposed approach to the USF HumanID Database. Experimental results demonstrate that the proposed fusion approach not only achieves better performance than the individual approaches, but also provides a large improvement in performance with respect to the baseline algorithm.
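The abstract does not state which distortion model is simulated. As a purely illustrative assumption, one simple way to derive synthetic templates from a real one is to blank progressively deeper bands of bottom rows, mimicking lower-body regions (feet, shadows, ground surface) that are unreliable under other conditions. The function name and the `max_cut`/`step` parameters are hypothetical.

```python
from typing import List

Template = List[List[float]]


def synthetic_templates(gei: Template, max_cut: int, step: int = 2) -> List[Template]:
    """Simulate lower-body distortion by blanking the bottom rows of a real template.

    Hypothetical distortion model: successive synthetic templates zero the
    bottom step, 2*step, ... rows, up to max_cut rows.
    """
    height = len(gei)
    templates: List[Template] = []
    for depth in range(step, min(max_cut, height) + 1, step):
        cut = [row[:] for row in gei]  # copy so the real template is left untouched
        for y in range(height - depth, height):
            cut[y] = [0.0] * len(cut[y])
        templates.append(cut)
    return templates


real = [[1.0, 1.0] for _ in range(4)]
for template in synthetic_templates(real, max_cut=4):
    print(template)
```

Features would then be learned separately from the real and synthetic sets, and their decisions fused, as the abstract describes.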


Gait Energy Image Representation: Comparative Performance Evaluation on USF HumanID Database

A new spatio-temporal gait representation, called Gait Energy Image (GEI), is proposed to characterize human walking properties for individual recognition by gait. To address the lack of training templates, we expand the training templates by analyzing human silhouette distortion under various conditions. Principal component analysis and multiple discriminant analysis are used to learn features from the expanded GEI training templates. We compare GEI-based gait recognition approaches with other gait recognition approaches on the USF HumanID Database. Experimental results show that the proposed GEI is an effective and efficient gait representation for individual recognition, and that the proposed approach achieves highly competitive performance with respect to current gait recognition approaches.


Human Recognition on Combining Kinematic and Stationary Features

Both human motion characteristics and body part measurements are important cues for human recognition at a distance. The former can be viewed as kinematic measurements and the latter as stationary measurements. We propose a kinematic-based approach to extract both kinematic and stationary features for human recognition. The proposed approach first estimates 3D human walking parameters by fitting the 3D kinematic model to the 2D silhouette extracted from a monocular image sequence. Kinematic and stationary features are then extracted from the kinematic and stationary parameters, respectively, and used separately for human recognition. Next, we discuss different strategies for combining kinematic and stationary features to make a decision. Experimental results compare these combination strategies and demonstrate the resulting improvement in human recognition performance.


Bayesian-based performance prediction for gait recognition

Existing gait recognition approaches do not give their theoretical or experiential performance predictions. Therefore, the discriminating power of gait as a feature for human recognition cannot be evaluated. We first propose a kinematic-based approach to recognize humans by gait. The proposed approach estimates 3D human walking parameters by performing a least squares fit of the 3D kinematic model to the 2D silhouette extracted from a monocular image sequence. Next, a Bayesian-based statistical analysis is performed to evaluate the discriminating power of the extracted features. Through probabilistic simulation, we not only predict the probability of correct recognition (PCR) with regard to different within-class feature variances, but also obtain the upper bound on PCR with regard to different human silhouette resolutions. In addition, the maximum number of people in a database is obtained given the allowable error rate. This is extremely important for gait recognition in large databases.
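To make the idea of "probabilistic simulation" of PCR concrete, here is a small Monte Carlo sketch. It is not the paper's Bayesian model: it assumes each person's mean feature vector is drawn uniformly from a unit hypercube, probes are that mean plus isotropic Gaussian noise (the within-class variation), and recognition is by nearest mean. All names and parameters are hypothetical.

```python
import random


def simulate_pcr(num_people: int, dim: int, within_std: float,
                 trials: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of the probability of correct recognition (PCR).

    Hypothetical model: class means ~ Uniform[0, 1]^dim, probe = mean +
    Gaussian noise with standard deviation within_std, nearest-mean decision.
    """
    rng = random.Random(seed)
    means = [[rng.random() for _ in range(dim)] for _ in range(num_people)]
    correct = 0
    for _ in range(trials):
        true_id = rng.randrange(num_people)
        probe = [m + rng.gauss(0.0, within_std) for m in means[true_id]]
        # Squared Euclidean distance from the probe to every enrolled mean.
        dists = [sum((p - m) ** 2 for p, m in zip(probe, mean)) for mean in means]
        predicted = min(range(num_people), key=dists.__getitem__)
        if predicted == true_id:
            correct += 1
    return correct / trials


for std in (0.01, 0.05, 0.2):
    print(std, simulate_pcr(num_people=50, dim=4, within_std=std))
```

Sweeping `within_std` mirrors the abstract's PCR-versus-variance analysis; increasing `num_people` until PCR falls below a target gives a crude analogue of the maximum database size for an allowable error rate.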


Kinematic-based human motion analysis in infrared sequences

In an infrared (IR) image sequence of human walking, the human silhouette can be reliably extracted from the background in most cases, regardless of lighting conditions and the colors of the human surfaces and backgrounds. Moreover, some important regions containing skin, such as the face and hands, can be accurately detected in IR image sequences. In this paper, we propose a kinematic-based approach for automatic human motion analysis from IR image sequences. The proposed approach estimates 3D human walking parameters by performing a modified least squares fit of the 3D kinematic model to the 2D silhouette extracted from a monocular IR image sequence, where the continuity and symmetry of human walking and the detected hand regions are also considered in the optimization function. Experimental results show that the proposed approach achieves good performance in gait analysis at different view angles with respect to the walking direction, and is promising for further gait recognition.


Individual recognition by kinematic-based gait analysis

Current gait recognition approaches only consider individuals walking fronto-parallel to the image plane. This makes them inapplicable for recognizing individuals walking at different angles with respect to the image plane. In this paper, we propose a kinematic-based approach to recognize individuals by gait. The proposed approach estimates 3D human walking parameters by performing a least squares fit of the 3D kinematic model to the 2D silhouette extracted from a monocular image sequence. A genetic algorithm is used for feature selection from the estimated parameters, and the individuals are then recognized from the feature vectors using a nearest neighbor method. Experimental results show that the proposed approach achieves good performance in recognizing individuals walking at different angles with respect to the image plane.
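The recognition stage can be sketched as a nearest neighbor classifier restricted to a selected subset of features. In a genetic algorithm, one common encoding (assumed here, not confirmed by the abstract) is a binary mask per chromosome, scored by leave-one-out nearest neighbor accuracy; the GA loop itself (selection, crossover, mutation) is omitted, and all names are hypothetical.

```python
from typing import List, Sequence


def masked_distance(a: Sequence[float], b: Sequence[float], mask: Sequence[int]) -> float:
    """Squared Euclidean distance using only the features whose mask bit is 1."""
    return sum((x - y) ** 2 for x, y, keep in zip(a, b, mask) if keep)


def loo_nn_accuracy(features: List[List[float]], labels: List[str],
                    mask: Sequence[int]) -> float:
    """Leave-one-out 1-nearest-neighbor accuracy for one candidate feature mask.

    Could serve as a GA fitness function (an assumption for illustration).
    """
    if not any(mask) or len(features) < 2:
        return 0.0
    correct = 0
    for i, probe in enumerate(features):
        others = [j for j in range(len(features)) if j != i]
        best = min(others, key=lambda j: masked_distance(probe, features[j], mask))
        if labels[best] == labels[i]:
            correct += 1
    return correct / len(features)


# Feature 0 separates the two walkers; feature 1 is noise.
features = [[0.0, 5.0], [0.1, 0.0], [1.0, 0.2], [1.1, 5.1]]
labels = ["A", "A", "B", "B"]
print(loo_nn_accuracy(features, labels, [1, 0]))  # 1.0
print(loo_nn_accuracy(features, labels, [0, 1]))  # 0.0
```

A GA would favor the first mask, which is the sense in which feature selection precedes nearest neighbor recognition.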




The determination of an individual's ethnicity, as a soft biometric, can be very useful in a video-based surveillance system. Currently, the face is commonly used to determine a person's ethnicity. Until now, gait has been used for individual recognition and gender classification but not for ethnicity determination. In this research, the Gait Energy Image (GEI) is used to analyze the recognition power of gait for ethnicity. Feature fusion, score fusion and decision fusion from multiple views of gait are explored. For feature fusion, GEI images and camera views are put together to form a third-order tensor (x, y, view). Multilinear principal component analysis (MPCA) is used to extract features from these tensor objects, which integrate all views. For score fusion, the similarity scores measured from single views are combined with a weighted sum rule. For decision fusion, ethnicity classification is performed on each individual view first, and the classification results are then combined with a majority vote rule to make the final determination. A database of 36 walking people (East Asian and South American) was acquired from 7 different camera views. The experimental results show that ethnicity can be determined automatically from human gait in video. The classification rate is improved by fusing multiple camera views, and a comparison among the fusion schemes shows that MPCA-based feature fusion performs best.
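The score-level and decision-level schemes described above can be sketched in a few lines. This assumes higher similarity means a better match and treats the per-view weights as given (how they are chosen is not stated in the abstract); the function names are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def weighted_sum_fusion(view_scores: List[Dict[str, float]], weights: List[float]) -> str:
    """Score fusion: combine per-view similarity scores with a weighted sum rule.

    view_scores[v][c] is the probe's similarity to class c in view v
    (higher = more similar); weights are hypothetical per-view weights.
    """
    if len(view_scores) != len(weights):
        raise ValueError("need one weight per view")
    fused: Dict[str, float] = {}
    for scores, w in zip(view_scores, weights):
        for label, s in scores.items():
            fused[label] = fused.get(label, 0.0) + w * s
    return max(fused, key=fused.get)


def majority_vote(view_decisions: List[str]) -> str:
    """Decision fusion: each view votes; ties go to the label seen first."""
    return Counter(view_decisions).most_common(1)[0][0]


scores = [
    {"East Asian": 0.9, "South American": 0.1},
    {"East Asian": 0.2, "South American": 0.8},
    {"East Asian": 0.3, "South American": 0.7},
]
print(weighted_sum_fusion(scores, [1.0, 1.0, 1.0]))  # South American
print(weighted_sum_fusion(scores, [3.0, 1.0, 1.0]))  # East Asian
print(majority_vote(["East Asian", "South American", "East Asian"]))  # East Asian
```

The example shows why weights matter: upweighting the single most confident view flips the fused decision.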


Video networks is an emerging interdisciplinary field with significant and exciting scientific and technological challenges. It holds great promise for solving many real-world problems and enabling a broad range of applications, including smart homes, video surveillance, environment and traffic monitoring, elderly care, intelligent environments, and entertainment in public and private spaces. This paper provides an overview of the design of a wireless video network as an experimental environment, as well as camera selection, hand-off and control, and anomaly detection. It addresses challenging questions in individual identification using gait and face at a distance, and presents new techniques for robust identification along with their comparison.


It has been challenging to recognize walking humans at arbitrary poses from a single camera or a small number of video cameras. Most attempts have used a 2D image/silhouette-based representation, with limited use of 3D kinematic model-based approaches. Unlike all previous work in computer vision and pattern recognition, the models of walking humans here are built from sensed 3D range data at selected poses, without any markers. An instance of a walking individual at a different pose is recognized using the 3D range data of that pose. Both modeling and recognition of an individual are done using dense 3D range data. The proposed approach first measures 3D human body data consisting of the representative poses during a gait cycle. Next, a 3D human body model is fitted to the body data using an approach that overcomes the inherent gaps in the data and estimates the body pose with high accuracy. A gait sequence is synthesized by interpolating joint positions and their movements from the fitted body models. Both dynamic and static gait features are obtained and used to define a similarity measure for individual recognition in the database. The experimental results show high recognition rates on our range-based 3D gait database.
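The synthesis step, filling in a full gait sequence between a few fitted key poses, can be illustrated with per-joint interpolation. Linear interpolation of 3D joint positions is an assumption made here for simplicity; the abstract does not specify the interpolation scheme, and the joint names and function are hypothetical.

```python
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]
Pose = Dict[str, Point3]  # joint name -> 3D position


def interpolate_poses(key_poses: List[Pose], frames_between: int) -> List[Pose]:
    """Synthesize a denser gait sequence from sparse fitted key poses.

    Uses linear interpolation of each joint position (an assumption);
    inserts frames_between intermediate poses between consecutive key poses.
    """
    if not key_poses:
        return []
    sequence: List[Pose] = []
    for start, end in zip(key_poses, key_poses[1:]):
        for step in range(frames_between + 1):
            t = step / (frames_between + 1)
            sequence.append({
                joint: tuple(a + t * (b - a) for a, b in zip(start[joint], end[joint]))
                for joint in start
            })
    sequence.append(dict(key_poses[-1]))  # include the final key pose itself
    return sequence


keys = [{"knee": (0.0, 0.0, 0.0)}, {"knee": (2.0, 0.0, 0.0)}]
for pose in interpolate_poses(keys, frames_between=1):
    print(pose["knee"])
```

Dynamic features (for example, joint trajectories over the synthesized cycle) and static features (for example, limb lengths) could then be measured from such a sequence.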


This paper considers face, side face, gait and ear, and their possible fusion, for human recognition. It presents an overview of some of the techniques that we have developed for (a) super-resolution-based face recognition in video, (b) gait-based recognition in video, (c) fusion of super-resolved side face and gait in video, (d) ear recognition in color/range images, and (e) fusion performance prediction and validation. It presents various real-world examples to illustrate the ideas and points out the relative merits of the approaches discussed.


Video-based human recognition at a distance remains a challenging problem for the fusion of multimodal biometrics. We present a new approach that utilizes and integrates information from side face and gait at the feature level. The features of face and gait are obtained separately using principal component analysis (PCA) from the enhanced side face image (ESFI) and the gait energy image (GEI), respectively. Multiple discriminant analysis (MDA) is employed on the concatenated features of face and gait to obtain discriminating synthetic features. The experimental results demonstrate that the synthetic features, encoding both side face and gait information, carry more discriminating power than the individual biometric features, and that the proposed feature level fusion scheme outperforms both match score level fusion and another feature level fusion scheme.
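The concatenation step that precedes MDA can be sketched as follows. The abstract does not say how the face and gait feature sets are scaled before concatenation; z-score normalization per dimension is assumed here so that neither modality dominates by magnitude. The MDA projection is omitted, and the function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def zscore_columns(rows: List[List[float]]) -> List[List[float]]:
    """Normalize each feature dimension to zero mean and unit variance across samples."""
    cols = list(zip(*rows))
    stats = [(mean(c), pstdev(c) or 1.0) for c in cols]  # guard constant features
    return [[(x - m) / s for x, (m, s) in zip(row, stats)] for row in rows]


def concatenate_features(face: List[List[float]], gait: List[List[float]]) -> List[List[float]]:
    """Feature-level fusion: normalize each modality, then concatenate per sample."""
    if len(face) != len(gait):
        raise ValueError("face and gait must describe the same samples")
    return [f + g for f, g in zip(zscore_columns(face), zscore_columns(gait))]


face_pca = [[1.0], [3.0]]               # hypothetical PCA features from ESFI
gait_pca = [[10.0, 0.0], [20.0, 0.0]]   # hypothetical PCA features from GEI
print(concatenate_features(face_pca, gait_pca))
```

The fused vectors would then be passed to MDA to obtain the discriminating synthetic features.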


We have introduced a new video-based method to recognize non-cooperating individuals at a distance who expose side views to the camera. Information from two biometric sources, side face and gait, is utilized and integrated for recognition. For side face, an enhanced side-face image (ESFI) is constructed, which has a higher resolution than an image obtained directly from a single video frame. For gait, the gait energy image (GEI), a compact spatiotemporal representation of gait in video, is used to characterize human walking properties. The experimental results show that constructing ESFI from multiple frames is promising for human recognition in video, and that better face features are extracted from ESFI than from the original side-face images (OSFIs).



Image registration between color and thermal images is a challenging problem because of the difficulty of finding correspondences. However, moving people in a static scene provide cues to address this problem. We propose a hierarchical scheme to automatically find the correspondence between the preliminary human silhouettes extracted from synchronous color and thermal image sequences for image registration. The proposed approach is shown to achieve good results for image registration and human silhouette extraction. Experimental results also compare various sensor fusion strategies and demonstrate the improvement in performance over non-fused cases for human silhouette extraction.
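The core intuition, that matching silhouettes of the same moving person reveals how the two images are aligned, can be shown with a deliberately simplified stand-in. This is not the hierarchical scheme from the abstract: it assumes a pure integer translation between the sensors and exhaustively picks the shift that maximizes foreground overlap. All names are hypothetical.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary silhouette: 1 = foreground


def overlap_after_shift(a: Mask, b: Mask, dx: int, dy: int) -> int:
    """Count pixels that are foreground in a and in b shifted by (dx, dy)."""
    count = 0
    for y in range(len(a)):
        for x in range(len(a[0])):
            sy, sx = y - dy, x - dx  # source pixel in b that lands on (x, y)
            if 0 <= sy < len(b) and 0 <= sx < len(b[0]) and a[y][x] and b[sy][sx]:
                count += 1
    return count


def best_translation(color_sil: Mask, thermal_sil: Mask, max_shift: int) -> Tuple[int, int]:
    """Exhaustive search over integer shifts of the thermal silhouette (assumed model)."""
    candidates = [(dx, dy) for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return max(candidates, key=lambda s: overlap_after_shift(color_sil, thermal_sil, *s))


color = [[0] * 5 for _ in range(5)]
thermal = [[0] * 5 for _ in range(5)]
for y in (2, 3):
    for x in (3, 4):
        color[y][x] = 1
for y in (1, 2):
    for x in (1, 2):
        thermal[y][x] = 1
print(best_translation(color, thermal, max_shift=2))  # (2, 1)
```

A practical system would need a richer transform model and a coarse-to-fine search, which is presumably what the hierarchical scheme provides.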


A new video-based recognition method is presented to recognize non-cooperating individuals at a distance in video who expose side views to the camera. Information from two biometric sources, side face and gait, is utilized and integrated at the feature level. For face, a high-resolution side face image is constructed from multiple video frames. For gait, the Gait Energy Image (GEI), a compact spatio-temporal representation of gait in video, is used to characterize human walking properties. Face features and gait features are obtained separately from the high-resolution side face image and the GEI, respectively, using a combined Principal Component Analysis (PCA) and Multiple Discriminant Analysis (MDA) method. The system is tested on a database of video sequences corresponding to 46 people. The results show that the integrated face and gait features carry the most discriminating power compared to any individual biometric.


We present a novel approach based on the gait energy image (GEI) and co-evolutionary genetic programming (CGP) for human activity classification. Specifically, Hu's moments and normalized histogram bins are extracted from the original GEIs as input features. CGP is employed to reduce the feature dimensionality and learn the classifiers. Majority voting is applied to the CGP classifiers to improve overall performance, exploiting the diversity of genetic programming. This learning-based approach improves classification accuracy by approximately 7 percent compared with traditional classifiers.
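The two kinds of input features named above can be computed directly from a GEI. To keep the sketch short, only the first two of Hu's seven moment invariants are computed (treating GEI intensities as weights), and the number of histogram bins and the [0, 1] intensity range are assumptions. The CGP stage is not shown.

```python
from typing import List

Image = List[List[float]]  # grayscale GEI, intensities in [0, 1]


def _raw_moment(img: Image, p: int, q: int) -> float:
    """Raw moment m_pq = sum over pixels of I(x, y) * x^p * y^q."""
    return sum(v * x ** p * y ** q for y, row in enumerate(img) for x, v in enumerate(row))


def hu_first_two(img: Image) -> List[float]:
    """First two Hu invariants: phi1 = eta20 + eta02, phi2 = (eta20 - eta02)^2 + 4*eta11^2."""
    m00 = _raw_moment(img, 0, 0)
    if m00 == 0:
        raise ValueError("image has no foreground mass")
    xc = _raw_moment(img, 1, 0) / m00
    yc = _raw_moment(img, 0, 1) / m00

    def eta(p: int, q: int) -> float:
        # Central moment (translation invariant), then scale normalization.
        mu = sum(v * (x - xc) ** p * (y - yc) ** q
                 for y, row in enumerate(img) for x, v in enumerate(row))
        return mu / m00 ** (1 + (p + q) / 2)

    e20, e02, e11 = eta(2, 0), eta(0, 2), eta(1, 1)
    return [e20 + e02, (e20 - e02) ** 2 + 4 * e11 ** 2]


def normalized_histogram(img: Image, bins: int) -> List[float]:
    """Fraction of pixels falling in each of `bins` equal-width intensity bins over [0, 1]."""
    counts = [0] * bins
    total = 0
    for row in img:
        for v in row:
            counts[max(0, min(int(v * bins), bins - 1))] += 1
            total += 1
    return [c / total for c in counts]


gei = [[0.0, 1.0], [0.5, 0.25]]
print(hu_first_two(gei), normalized_histogram(gei, bins=2))
```

Because the central moments are taken about the intensity centroid, the invariants do not change if the GEI content is translated, which is one reason such features are attractive for silhouette-derived images.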


We introduce a new video-based method for recognizing non-cooperating individuals at a distance who expose side views to the camera. Information from two biometric sources, side face and gait, is used and integrated for recognition. For side face, we construct an Enhanced Side Face Image (ESFI), which fuses face information from multiple video frames into an image of higher resolution than one obtained directly from a single frame. For gait, we use the Gait Energy Image (GEI), a compact spatio-temporal representation of gait in video, to characterize human walking properties. Face features and gait features are extracted separately from the ESFI and the GEI, respectively, using a combined Principal Component Analysis (PCA) and Multiple Discriminant Analysis (MDA) method, and are then integrated at the match score level. Our approach is tested on a database of video sequences from 46 people, and different fusion methods are compared and analyzed. The experimental results show that (a) integrating side face and gait information is effective for human recognition in video, and (b) constructing an ESFI from multiple frames is a promising idea for human recognition in video, yielding better face features than those extracted from the original face images.
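
The abstract integrates face and gait at the match score level but does not say which combination rule is used. A common choice, assumed here, is to min-max normalize each modality's scores and then apply the sum or product rule. The sketch treats scores as similarities (higher means a better match); all function names are hypothetical.

```python
import numpy as np


def min_max(scores):
    """Rescale one modality's match scores to [0, 1] (higher = more similar)."""
    s = np.asarray(scores, dtype=float)
    span = s.max() - s.min()
    return np.zeros_like(s) if span == 0 else (s - s.min()) / span


def fuse_scores(face_scores, gait_scores, rule="sum"):
    """Combine per-gallery-subject similarity scores from the two modalities."""
    f, g = min_max(face_scores), min_max(gait_scores)
    if rule == "sum":
        return f + g
    if rule == "product":
        return f * g
    raise ValueError(f"unknown rule: {rule}")


def identify(face_scores, gait_scores, rule="sum"):
    """Index of the gallery subject with the highest fused score."""
    return int(np.argmax(fuse_scores(face_scores, gait_scores, rule)))
```

Normalizing first matters because face and gait matchers generally produce scores on unrelated scales; without it, one modality would dominate the sum.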

Most gait recognition approaches study only human walking fronto-parallel to the image plane, which is unrealistic in video surveillance applications. Human gait appearance depends on various factors, including the locations of the camera and the person, the camera axis, and the walking direction. By analyzing these factors, we propose a statistical approach for view-insensitive gait recognition. The proposed approach recognizes humans using a single camera and avoids the difficulties of recovering the human body structure and of camera calibration. Experimental results show that the proposed approach achieves good performance in recognizing individuals walking along different directions.

Human recognition from arbitrary views is an important task for many applications, such as visual surveillance, covert security and access control. It has proven very difficult in practice, especially when a person is walking at a distance in real-world outdoor conditions. For optimal performance, the system should use as much information as possible from the observations. In this paper, we propose an innovative system that combines cues from the face profile and the gait silhouette in single-camera video sequences. For optimal face profile recognition, we first reconstruct a high-resolution face profile image from several adjacent low-resolution video frames, and then use a curvature-based matching method for recognition. For gait, we use the Gait Energy Image (GEI) to characterize human walking properties, and recognition is carried out by direct GEI matching. Several schemes are considered for fusing face profile and gait. A number of dynamic video sequences are tested to evaluate the performance of our system, and the experimental results are compared and discussed.
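
"Direct GEI matching" can be read as a nearest-neighbor search over gallery GEIs. The sketch below assumes Euclidean distance between size-normalized GEIs of the same shape; the paper may use a different distance, and the function names and gallery layout are hypothetical.

```python
import numpy as np


def gei_distance(a, b):
    """Euclidean distance between two same-sized GEIs."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("GEIs must be size-normalized to the same shape")
    return float(np.linalg.norm(a - b))  # Frobenius norm = Euclidean on pixels


def match_gei(probe, gallery):
    """Return (label, distance) of the closest gallery GEI.

    `gallery` maps a subject label to that subject's GEI.
    """
    label, template = min(gallery.items(), key=lambda kv: gei_distance(probe, kv[1]))
    return label, gei_distance(probe, template)
```

The per-subject distances computed here are also what a score-level fusion scheme would combine with the face-profile matcher's output.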

Human gait properties can be affected by various environmental contexts, such as the walking surface and carried objects. In this paper, we propose a novel approach to individual recognition that combines different gait classifiers with knowledge of environmental contexts to improve recognition performance. Different classifiers are designed to handle different environmental contexts, and context-specific features are explored for context characterization. During recognition, we determine the probability of each environmental context in a probe sequence from its context features, and apply probabilistic classifier combination strategies for recognition. Experimental results demonstrate the effectiveness of the proposed approach.
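
One standard probabilistic combination rule, assumed here rather than taken from the paper, weights each context-specific classifier by the estimated probability of its context: P(k | x) = Σ_c P(c | x) · P(k | x, c), where c ranges over contexts and k over enrolled subjects. A minimal sketch (the function name is hypothetical):

```python
import numpy as np


def combine_by_context(context_probs, class_posteriors):
    """Context-weighted combination of classifier posteriors.

    context_probs: shape (C,), estimated P(context c | probe), summing to 1.
    class_posteriors: shape (C, K); row c holds P(subject k | probe, context c)
        from the classifier designed for context c.
    Returns shape (K,): P(subject k | probe).
    """
    w = np.asarray(context_probs, dtype=float)
    P = np.asarray(class_posteriors, dtype=float)
    if not np.isclose(w.sum(), 1.0):
        raise ValueError("context probabilities must sum to 1")
    return w @ P  # sum over contexts of P(c | x) * P(k | x, c)
```

For example, with two hypothetical contexts (grass, concrete) estimated at 0.8 and 0.2, the grass-trained classifier's posteriors dominate the final decision without the concrete classifier being discarded.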

Here we investigate the properties of repetitive human activity in thermal infrared imagery, where human motion can be easily detected against the background regardless of lighting conditions and the colors of human surfaces and backgrounds. We employ an efficient spatio-temporal representation for repetitive human activity recognition, which represents a human motion sequence in a single image while preserving some temporal information. A statistical approach is used to extract features for activity recognition. Experimental results show that the proposed approach achieves good performance for repetitive human activity recognition.
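
The abstract does not name its single-image representation. As an illustrative stand-in only, the sketch below builds a Motion History Image (MHI)-style image, a well-known representation in which more recently active pixels appear brighter, so some temporal order survives in one image. It assumes binary foreground masks are already available, for example from thresholding the thermal frames; the function name and the `tau` default are assumptions.

```python
import numpy as np


def motion_history_image(masks, tau=None):
    """MHI-style image from binary foreground masks of shape (T, H, W).

    Pixels active in a frame are set to tau; inactive pixels decay by 1
    per frame, floored at 0. The result is scaled to [0, 1], so brighter
    means more recent activity. tau defaults to the number of frames.
    """
    m = np.asarray(masks, dtype=bool)
    tau = m.shape[0] if tau is None else tau
    mhi = np.zeros(m.shape[1:], dtype=float)
    for frame in m:
        mhi = np.where(frame, float(tau), np.maximum(mhi - 1.0, 0.0))
    return mhi / tau
```

Statistical features, such as a PCA projection of the flattened image, could then be extracted from this single image instead of the whole sequence.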

Existing gait recognition approaches do not provide theoretical or experimental performance predictions, so the discriminating power of gait as a feature for human recognition cannot be evaluated. We propose a kinematic-based approach to recognize humans by gait. The proposed approach estimates 3D human walking parameters by performing a least-squares fit of a 3D kinematic model to the 2D silhouette extracted from a monocular image sequence.
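
Fitting a full 3D kinematic model to 2D silhouettes is a nonlinear problem and is not reproduced here. As a much simpler illustration of least-squares estimation of a walking parameter, the sketch fits a periodic joint-angle model θ(t) = a + b·sin(ωt) + c·cos(ωt) to measured angles, assuming the gait frequency ω is already known. The model and function name are assumptions for illustration.

```python
import numpy as np


def fit_joint_angle(t, theta, omega):
    """Linear least-squares fit of theta(t) ~ a + b*sin(omega*t) + c*cos(omega*t).

    omega is an assumed, known gait angular frequency (rad per time unit).
    Returns (mean, amplitude, phase) with
    theta(t) ~ mean + amplitude * sin(omega*t + phase).
    """
    t = np.asarray(t, dtype=float)
    A = np.column_stack([np.ones_like(t), np.sin(omega * t), np.cos(omega * t)])
    (a, b, c), *_ = np.linalg.lstsq(A, np.asarray(theta, dtype=float), rcond=None)
    # b*sin + c*cos = R*sin(omega*t + phi) with R*cos(phi) = b, R*sin(phi) = c
    return float(a), float(np.hypot(b, c)), float(np.arctan2(c, b))
```

Because the model is linear in (a, b, c), one call to `np.linalg.lstsq` solves it; the full kinematic fit instead requires an iterative nonlinear solver.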

In this paper, we propose a new spatio-temporal gait representation, called the Gait Energy Image (GEI), to characterize human walking properties for individual recognition by gait. To address the lack of training templates, we generate a series of new GEI templates by analyzing human silhouette distortion under various conditions. Principal component analysis followed by multiple discriminant analysis is used to learn features from the expanded set of GEI training templates, and recognition is carried out based on the learned features. Experimental results show that the proposed GEI is an effective and efficient gait representation for individual recognition, and that the proposed approach achieves highly competitive performance with respect to current gait recognition approaches.
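
A GEI is the pixel-wise average of size-normalized, horizontally aligned binary silhouettes over a gait sequence. The sketch computes it and then learns a PCA projection followed by MDA (Fisher's multi-class linear discriminant) on flattened templates. Silhouette preprocessing is assumed done, and the component counts and function names are illustrative parameters, not values from the paper.

```python
import numpy as np


def gait_energy_image(silhouettes):
    """Average aligned binary silhouettes (frames x H x W) into a GEI in [0, 1]."""
    return np.asarray(silhouettes, dtype=float).mean(axis=0)


def pca_mda(X, y, n_pca, n_mda):
    """Learn PCA followed by MDA on row vectors X with labels y.

    Keep n_pca <= (samples - classes) so the within-class scatter is
    well conditioned, and n_mda <= (classes - 1).
    Returns (mean, T) where features are (x - mean) @ T.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mean = X.mean(axis=0)
    Xc = X - mean
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    P = Vt[:n_pca].T                      # PCA basis, shape (d, n_pca)
    Z = Xc @ P                            # training data in PCA space
    mu = Z.mean(axis=0)
    Sw = np.zeros((n_pca, n_pca))         # within-class scatter
    Sb = np.zeros((n_pca, n_pca))         # between-class scatter
    for c in np.unique(y):
        Zc = Z[y == c]
        mc = Zc.mean(axis=0)
        Sw += (Zc - mc).T @ (Zc - mc)
        d = (mc - mu)[:, None]
        Sb += len(Zc) * (d @ d.T)
    evals, evecs = np.linalg.eig(np.linalg.pinv(Sw) @ Sb)
    order = np.argsort(-evals.real)
    W = evecs[:, order[:n_mda]].real      # leading discriminant directions
    return mean, P @ W                    # combined transform, shape (d, n_mda)


def project(x, mean, T):
    """Map flattened template(s) into the learned feature space."""
    return (np.asarray(x, dtype=float) - mean) @ T
```

Recognition can then compare a probe's projected features against per-subject means of the projected training templates.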

This paper presents a novel approach for human recognition that combines statistical gait features from real and synthetic templates. Real templates are computed directly from training silhouette sequences, while synthetic templates are generated from training sequences by simulating silhouette distortion. A statistical feature extraction approach is used to learn effective features from both real and synthetic templates. Features learned from real templates characterize the human walking properties present in the training sequences, and features learned from synthetic templates predict gait properties under other conditions. A feature fusion strategy is therefore applied at the decision level to improve recognition performance. We apply the proposed approach to the USF HumanID Database. Experimental results demonstrate that the proposed fusion approach not only achieves better performance than the individual approaches but also provides a large improvement in performance over the baseline algorithm.
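
The abstract does not specify how silhouette distortion is simulated. One simple possibility, assumed here for illustration, is to blank the bottom k rows of a real GEI for several values of k, mimicking silhouettes corrupted near the feet (for instance by the walking surface or shoes). The cut depths and function name are hypothetical; each synthetic template would then pass through the same feature learning as the real ones.

```python
import numpy as np


def synthetic_templates(gei, cut_rows=(2, 4, 6)):
    """Hypothetical distortion model: for each k in cut_rows, return a copy
    of the GEI with its bottom k rows set to zero. The input is not modified."""
    g = np.asarray(gei, dtype=float)
    out = []
    for k in cut_rows:
        t = g.copy()
        if k > 0:              # guard: t[-0:] would select every row
            t[-k:, :] = 0.0
        out.append(t)
    return out
```

Generating several cut depths per real template expands the training set, which is the role the synthetic templates play before their features are fused with those learned from real templates.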

This paper presents a novel approach for human recognition

by combining statistical gait features from real and